Aws Hadoop Jar

–hadoop02corejar •Add this jar file to your project class path Warning!.

Aws hadoop jar. To import the libraries into a Maven build, add hadoopaws JAR to the build dependencies;. That's interesting For failure on 122GB file data , Can you check if you have copied required jars to all nodes You should be able to do hadoop fs ls on s3 file system from all nodes. This is a step by step guide to install a Hadoop cluster on Amazon EC2 I have my AWS EC2 instance ecapsoutheast1computeamazonawscom ready on which I will install and configure Hadoop, java 17 is already installed In case java is not installed on you AWS EC2 instance, use below commands.

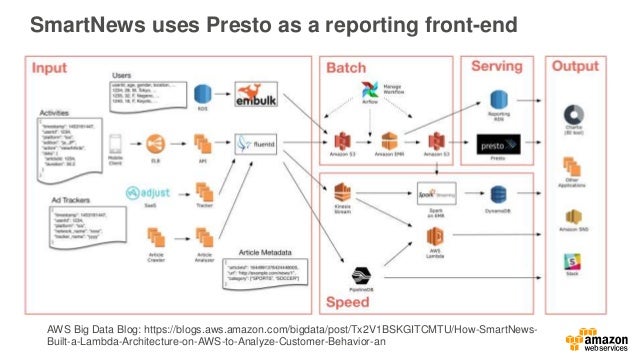

Hello, We thought that it might be helpful to post a write up on how to use DistributedCache on Amazon Elastic MapReduce DistributedCache is a mechanism that hadoop provides to distribute application specific, readonly files across all instances of the cluster The DistributedCache can help serve the following purposes Setup your hadoop application specific binaries If your hadoop. PS I'm using hadoop 102 as myapp is configured to use it Replies 3 Pages 1 Last Post Sep 25, 14 227 AM by JacoV@AWS. Emrdynamodbconnector Access data stored in Amazon DynamoDB with Apache Hadoop, Apache Hive, and Apache Spark Introduction You can use this connector to access data in Amazon DynamoDB using Apache Hadoop, Apache Hive, and Apache Spark in Amazon EMR.

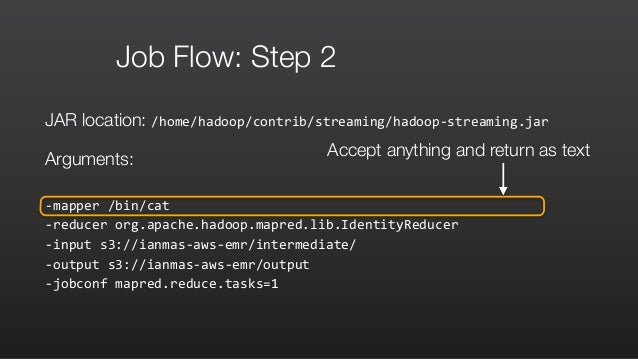

HowTo AWS CLI Elastic MapReduce Custom JAR Job Flow HowTo AWS CLI Elastic MapReduce HBase Posted by Eric Dowd T howto awsclielasticmapreduce aws cli elasticmapreduce. Apache Hadoop Amazon Web Services Support This module contains code to support integration with Amazon Web Services It also declares the dependencies needed to work with AWS services Central (42). Emrdynamodbconnector Access data stored in Amazon DynamoDB with Apache Hadoop, Apache Hive, and Apache Spark Introduction You can use this connector to access data in Amazon DynamoDB using Apache Hadoop, Apache Hive, and Apache Spark in Amazon EMR.

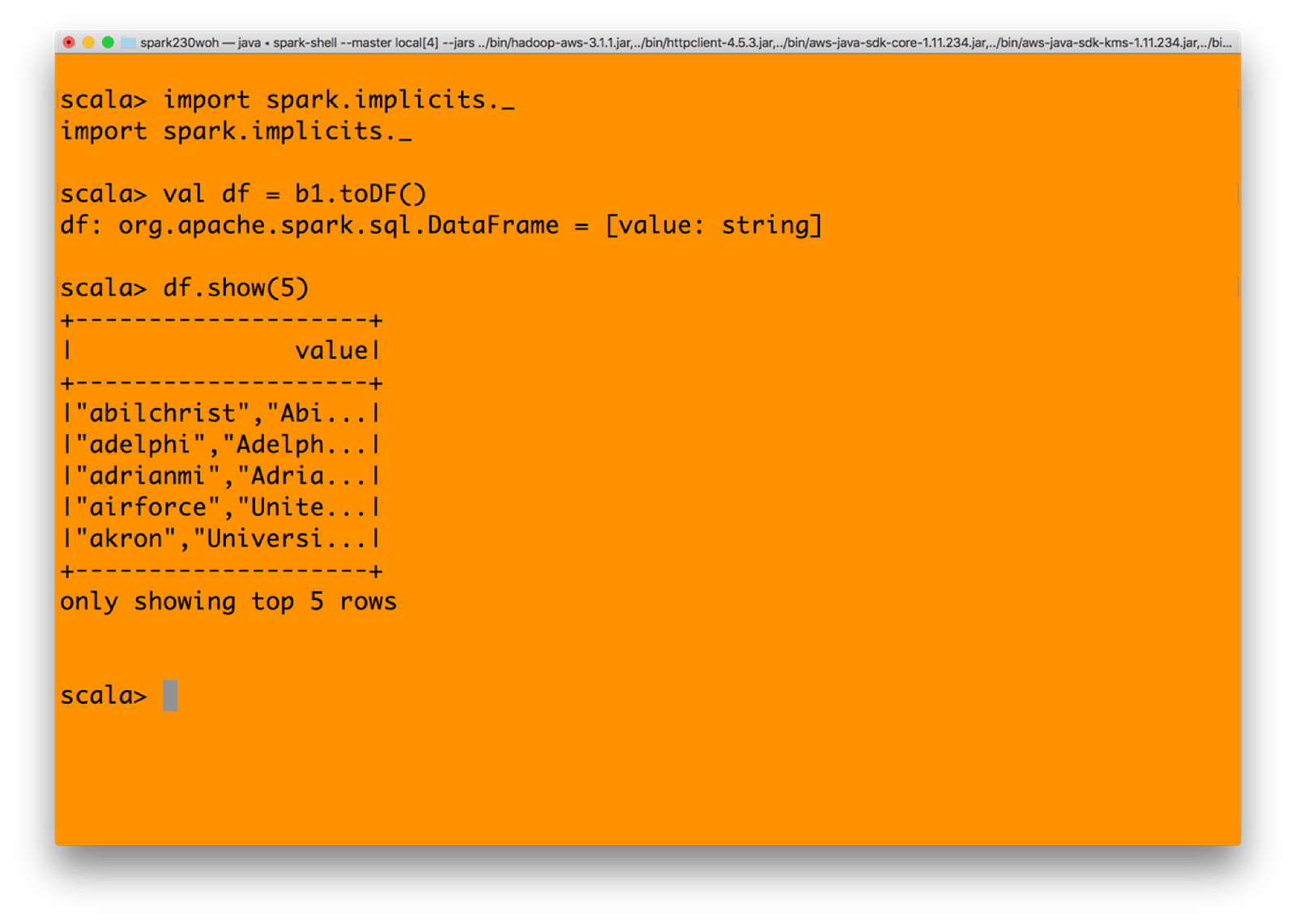

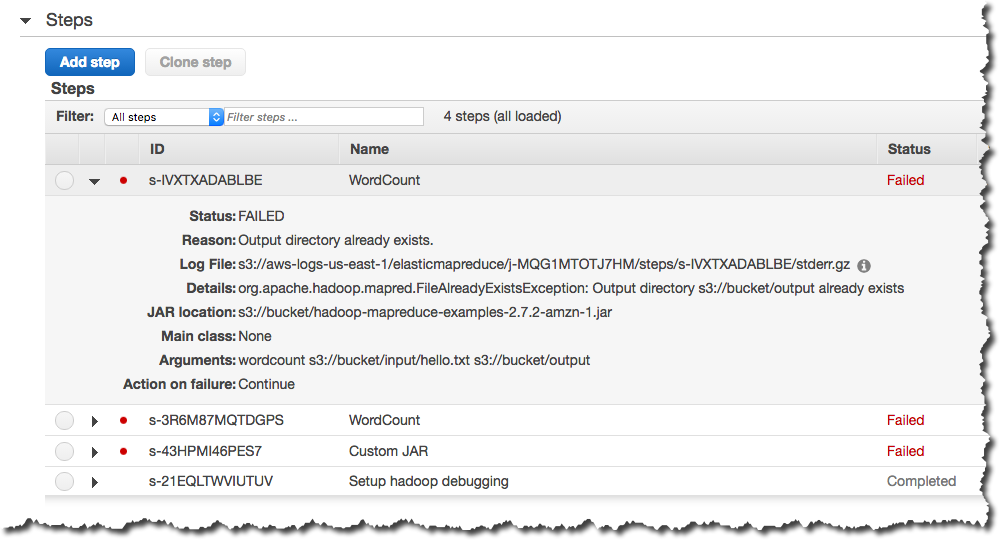

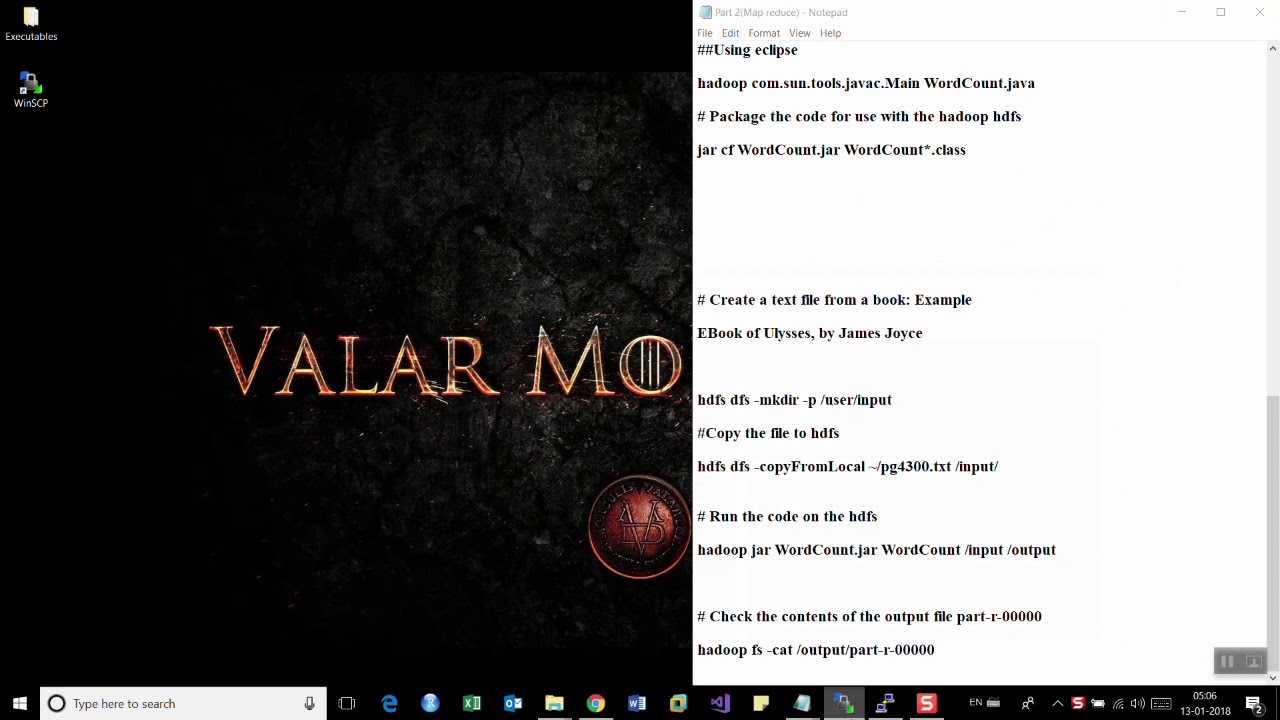

11 As a last step Go to file>Export>Java>JAR file, then select your file and give it a name, and specific location You need this location, because you will upload this JAR to S3 later 12 To Run this file in AWS we follow steps from 1 to 4 that we mentioned in First Using AWS word count program. Apache Spark and Hadoop on an AWS Cluster with Flintrock Part 4 $ ls hadoop scalademo_211jar spark Submitting with sparksubmit Finally, to actually run our job on our cluster, we must. Run a JAR file on Amazon EMR which I created using Hadoop 2 7 5 0 votes I found that Amazon EMR has changed it's hadoop version from 273 to 2 and there is no option for 275.

Randomly changing hadoop and aws JARs in the hope of making a problem “go away” or to gain access to a feature you want, will not lead to the outcome you desire Tip you can use mvnrepository to determine the dependency version requirements of a specific hadoopaws JAR published by the ASF. MapReduce (with HDFS Path) hadoop jar WordCountjar WordCount /analytics/aws/input/resultcsv /analytics/aws/output/1 MapReduce (with S3 Path) hadoop jar WordCount. •A collection of services that together make up a cloud computing platform.

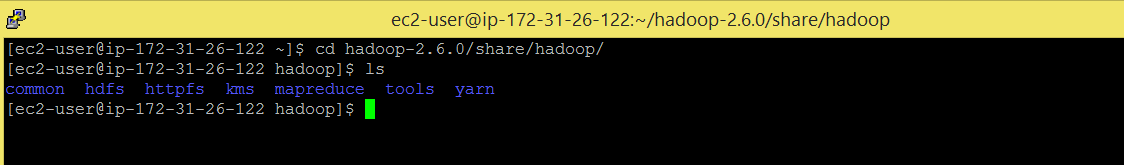

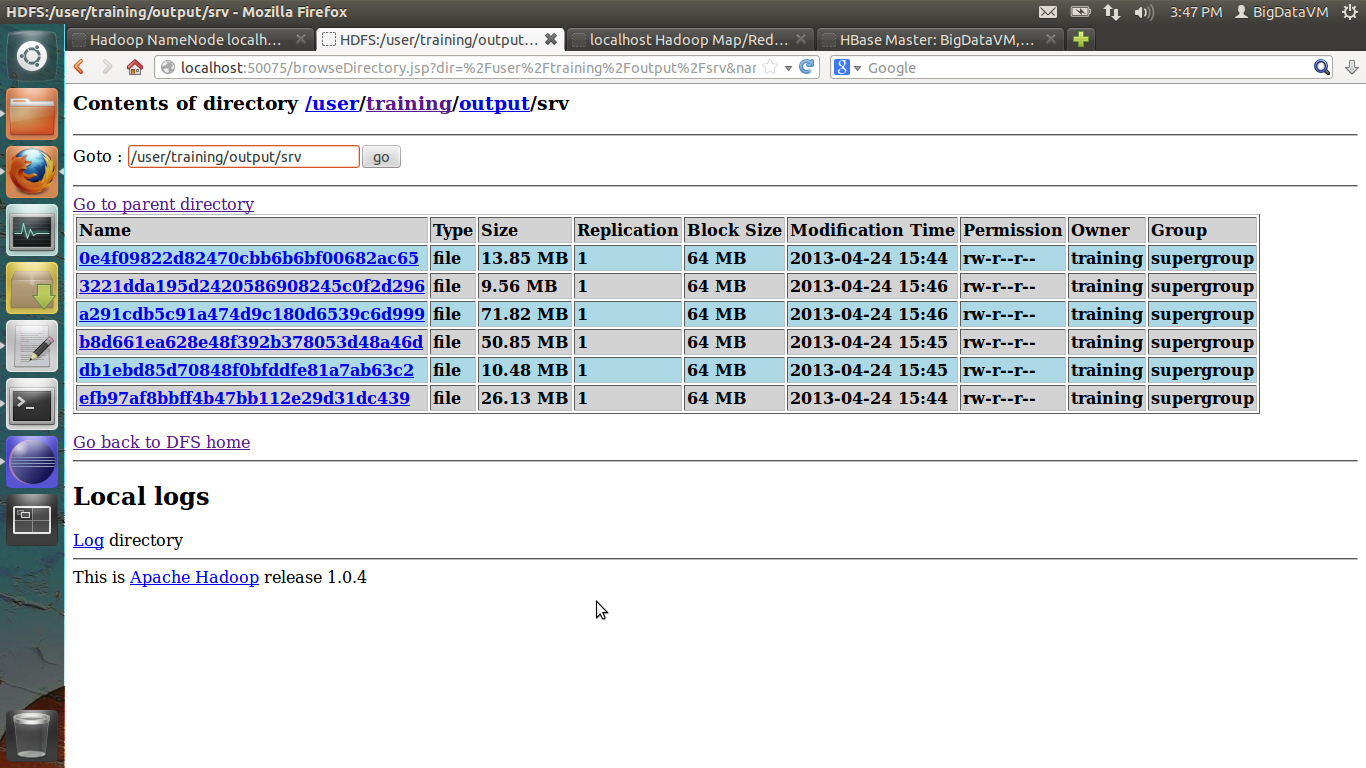

1 Login to our Hadoop cluster on AWS cloud (for free) 2 Try HDFS 3 Run a MapReduce job Login to our Hadoop cluster on AWS cloud (for free) You would need keys to login to our cluster Sign up and get you the keys if you don’t have the keys yet Follow the instructions below once you have the keys Host IP –. Run a JAR file on Amazon EMR which I created using Hadoop 2 7 5 0 votes I found that Amazon EMR has changed it's hadoop version from 273 to 2 and there is no option for 275. The problem is that S3AFileSystemcreate() looks for SemaphoredDelegatingExecutor(comgooglecommonutilconcurrentListeningExecutorService) which does not exist in hadoopclientapi311jar What does exist is SemaphoredDelegatingExecutor(orgapachehadoopshadedcomgooglecommonutilconcurrentListeningExecutorService) To work around this issue I created a version of hadoopaws311.

MapReduce (with HDFS Path) hadoop jar WordCountjar WordCount /analytics/aws/input/resultcsv /analytics/aws/output/1 MapReduce (with S3 Path) hadoop jar WordCount. Cloud, ec2, aws, hadoop, elastic mapreduce, amazon elastic mapreduce, jar, mapreduce, hadoop word count Published at DZone with permission of Muhammad Khojaye , DZone MVB See the original. The S3A connector is implemented in the hadoopaws JAR If it is not on the classpath stack trace Do not attempt to mix a "hadoopaws" version with other hadoop artifacts from different versions They must be from exactly the same release Otherwise stack trace The S3A connector is depends on AWS SDK JARs.

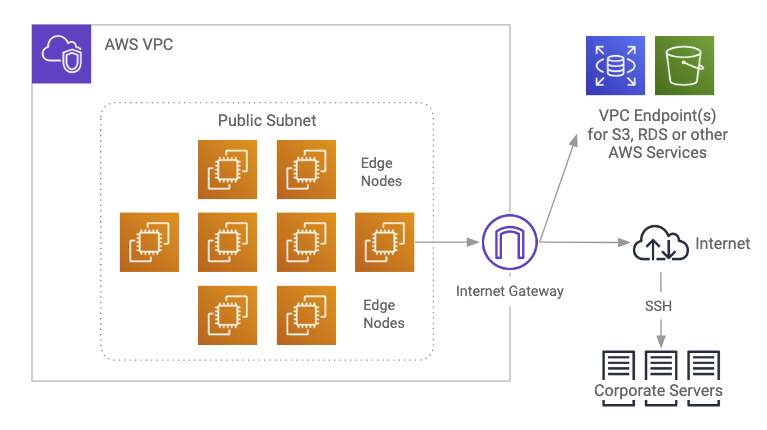

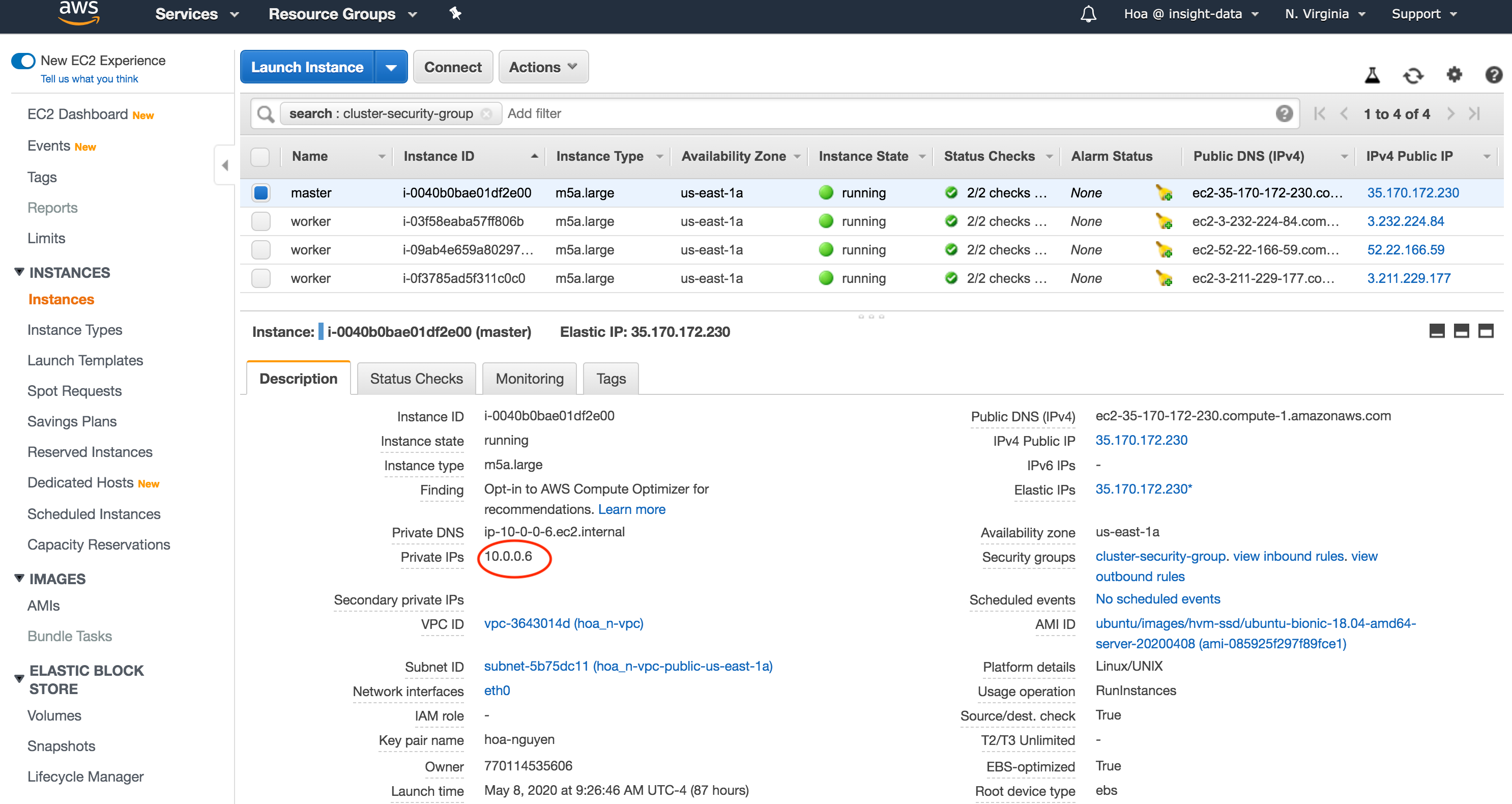

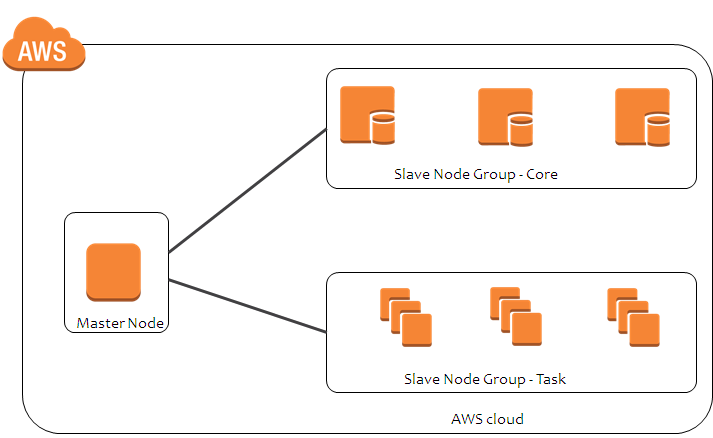

An Amazon EMR cluster consists of Amazon EC2 instances, and a tag added to an Amazon EMR cluster will be propagated to each active Amazon EC2 instance in that cluster You cannot add, edit, or remove tags from terminated clusters or terminated Amazon EC2 instances which were part of an active cluster. Apache Spark and Hadoop on an AWS Cluster with Flintrock Part 4 $ ls hadoop scalademo_211jar spark Submitting with sparksubmit Finally, to actually run our job on our cluster, we must. Hadoop, at it’s version # 1 was a combination of Map/Reduce compute framework and HDFS distributed file system We are now well into version 2 of hadoop and the reality is Map/Reduce is legacy Apache Spark, HBase, Flink and others are.

•coresitexml •hadoopenvsh •yarnsitexml •hdfssitexml •mapredsitexml 8 Set the hadoop config files We need to set the below files in order for hadoop to function properly •Copy and paste the below configurations in coresitexml> go to directory where all the config files are present (cd. Apache™ Hadoop® is an open source software project that can be used to efficiently process large datasets Instead of using one large computer to process and store the data, Hadoop allows clustering commodity hardware together to analyze massive data sets in parallel. This is a step by step guide to install a Hadoop cluster on Amazon EC2 I have my AWS EC2 instance ecapsoutheast1computeamazonawscom ready on which I will install and configure Hadoop, java 17 is already installed In case java is not installed on you AWS EC2 instance, use below commands.

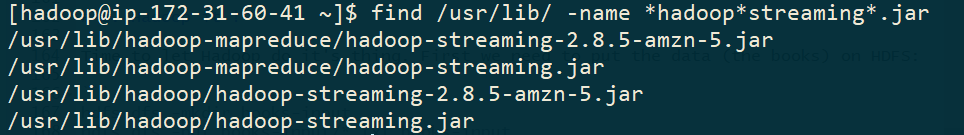

Running on a AWS Hadoop Cluster Please refer to this tutorial for starting a Hadoop cluster on AWS Upload a few books (from Gutenbergorg or some other sites) to HDFS Find the streaming java library. Hadoop on AWS Cluster Starting up Cluster Finished Startup Master node public DNS Upload your jar file to run a job using steps, you can run a job by doing ssh to the master node as well (shown later) Location of jar file on s3 EMR started the master and worker nodes as EC2. Basically, the directory that you are packaging into the jar is confusing the jar file in locating the main class file Instead if trying do the following.

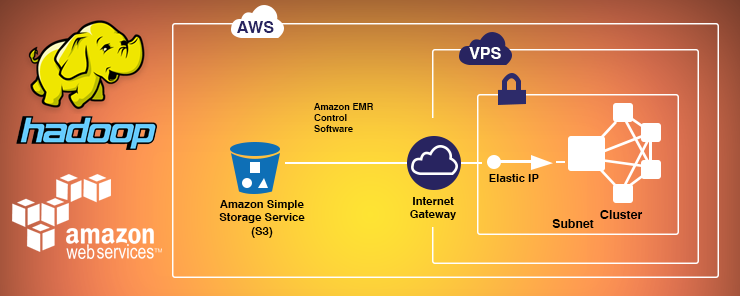

Try our Hadoop cluster;. Amazon Elastic MapReduce (EMR) is a web service using which developers can easily and efficiently process enormous amounts of data It uses a hosted Hadoop framework running on the webscale infrastructure of Amazon EC2 and Amazon S3 Amazon EMR removes most of the cumbersome details of Hadoop, while take care for provisioning of Hadoop, running the job flow, terminating the job flow, moving. Setup & config a Hadoop cluster on these instances;.

Run a JAR file on Amazon EMR which I created using Hadoop 2 7 5 0 votes I found that Amazon EMR has changed it's hadoop version from 273 to 2 and there is no option for 275. Bin/hadoop jar hadoopmapredexamples*jar pi 10 Another example, using some actual data Use an existing key pair to SSH into the master node of the Amazon EC2 cluster as the user "hadoop"\rOtherwise you can proceed without an EC2 key pair. The problem is that S3AFileSystemcreate() looks for SemaphoredDelegatingExecutor(comgooglecommonutilconcurrentListeningExecutorService) which does not exist in hadoopclientapi311jar What does exist is SemaphoredDelegatingExecutor(orgapachehadoopshadedcomgooglecommonutilconcurrentListeningExecutorService) To work around this issue I created a version of hadoopaws311.

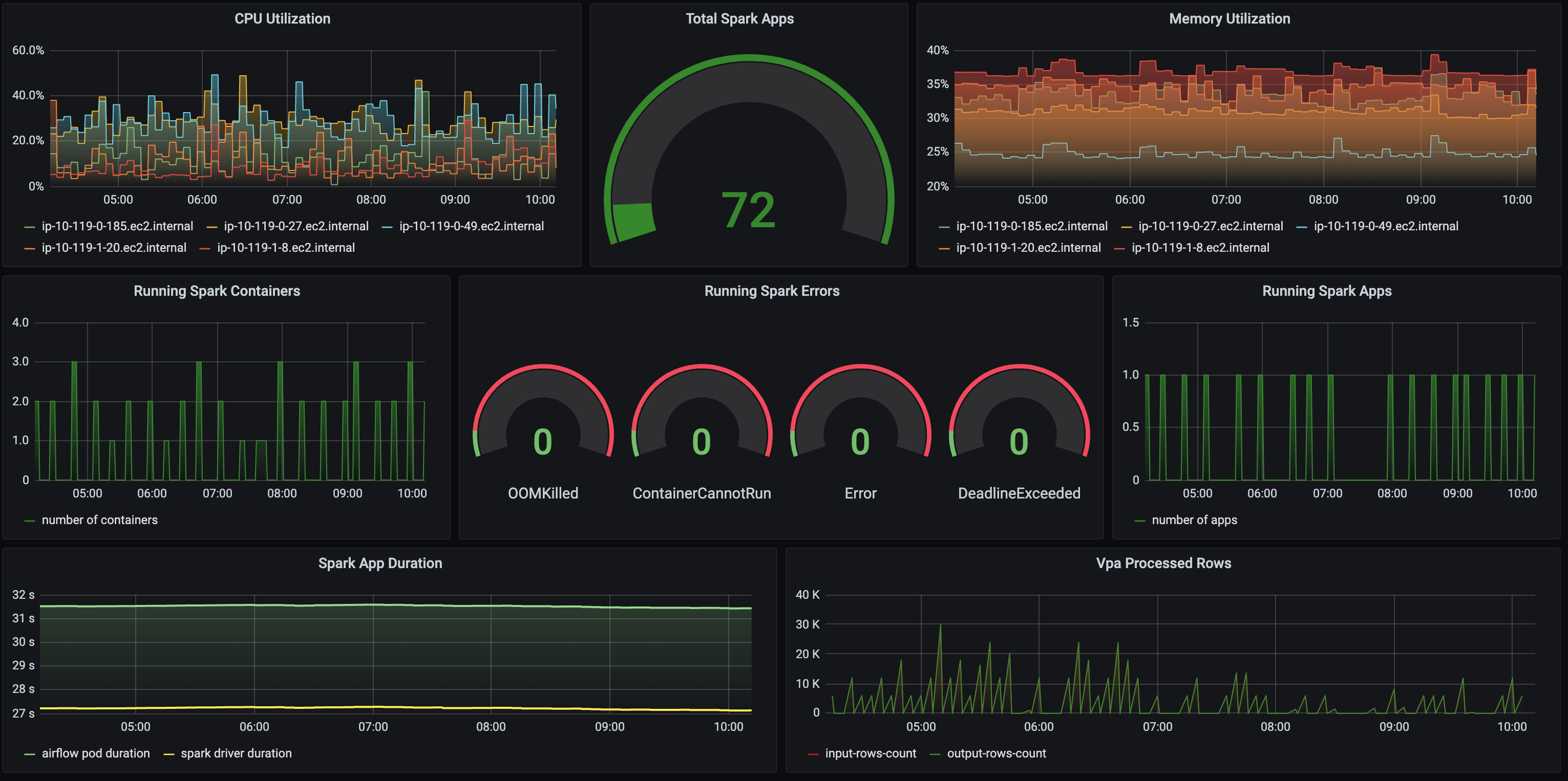

HADOOPCLI is an interactive command line shell that makes interacting with the Hadoop Distribted Filesystem (HDFS) simpler and more intuitive than the standard commandline tools that come with Hadoop If you're familiar with OS X, Linux, or even Windows terminal/consolebased applications, then you are likely familiar with features such as tab completion, command history, and ANSI formatting. Automating Hadoop Computations on AWS Today, we will cover a solution for automating Big Data (Hadoop) computations And, to show it in action, I'm going to provide an example using open dataset. Some organizations are leveraging S3 from Amazon Web Services (AWS) so that they can use data easily via other compute environments such as Hadoop, RDBMS, or take your pick of an EC2 services to.

MapReduce (with HDFS Path) hadoop jar WordCountjar WordCount /analytics/aws/input/resultcsv /analytics/aws/output/1 MapReduce (with S3 Path) hadoop jar WordCount. Hadoop on AWS Cluster Starting up Cluster Finished Startup Master node public DNS Upload your jar file to run a job using steps, you can run a job by doing ssh to the master node as well (shown later) Location of jar file on s3 EMR started the master and worker nodes as EC2. This is a step by step guide to install a Hadoop cluster on Amazon EC2 I have my AWS EC2 instance ecapsoutheast1computeamazonawscom ready on which I will install and configure Hadoop, java 17 is already installed In case java is not installed on you AWS EC2 instance, use below commands.

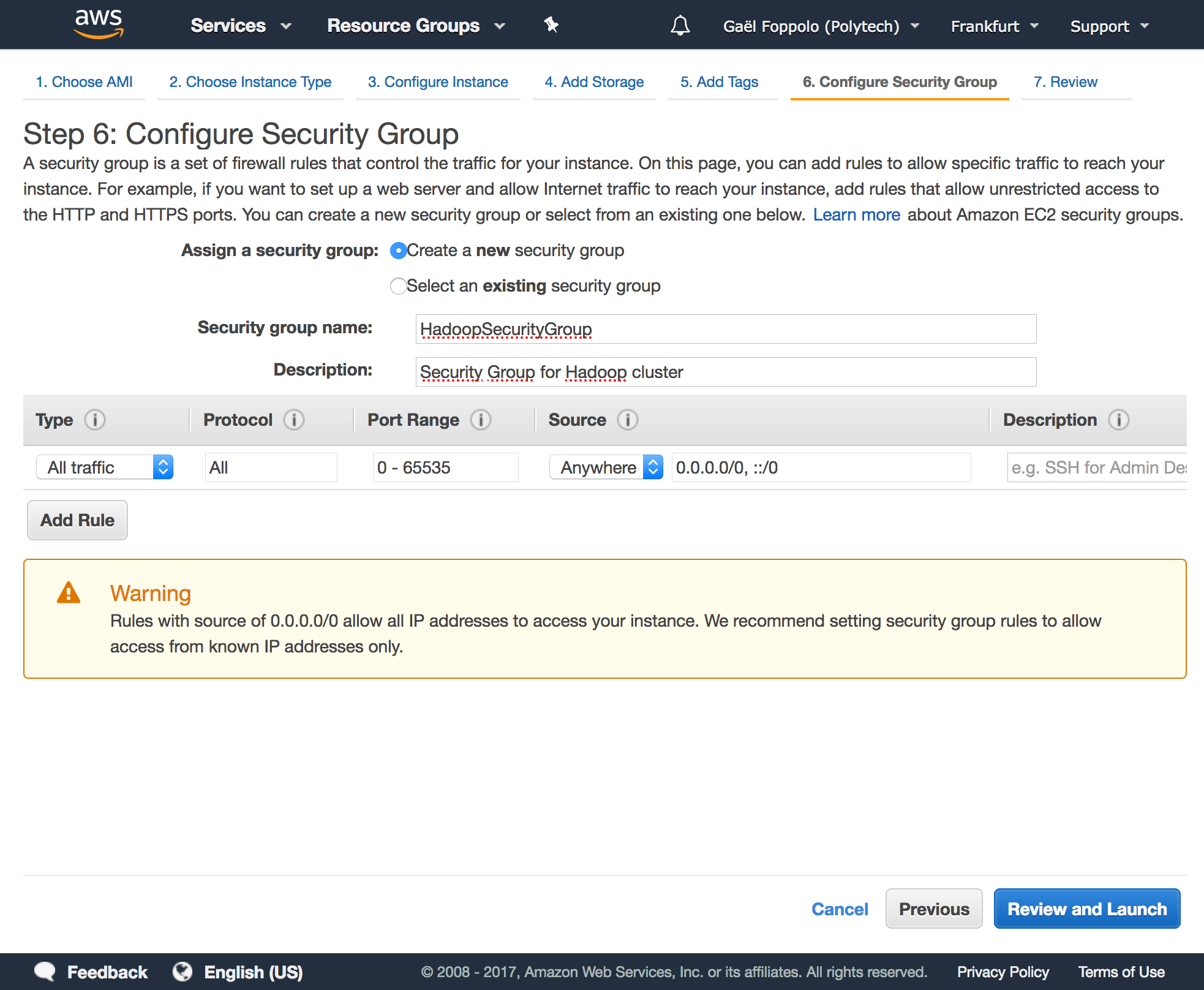

It will pull in a compatible awssdk JAR The hadoopaws JAR does not declare any dependencies other than that dependencies unique to it, the AWS SDK JAR This is simplify excluding/tuning Hadoop dependency JARs in downstream applications. ️ Setup AWS instance We are going to create an EC2 instance using the latest Ubuntu Server as OS After logging on AWS, go to AWS Console, choose the EC2 service On the EC2 Dashboard, click on Launch Instance. •A collection of services that together make up a cloud computing platform.

Hello, We thought that it might be helpful to post a write up on how to use DistributedCache on Amazon Elastic MapReduce DistributedCache is a mechanism that hadoop provides to distribute application specific, readonly files across all instances of the cluster The DistributedCache can help serve the following purposes Setup your hadoop application specific binaries If your hadoop. MapReduce (with HDFS Path) hadoop jar WordCountjar WordCount /analytics/aws/input/resultcsv /analytics/aws/output/1 MapReduce (with S3 Path) hadoop jar WordCount. Apache Spark and Hadoop on an AWS Cluster with Flintrock Part 4 $ ls hadoop scalademo_211jar spark Submitting with sparksubmit Finally, to actually run our job on our cluster, we must.

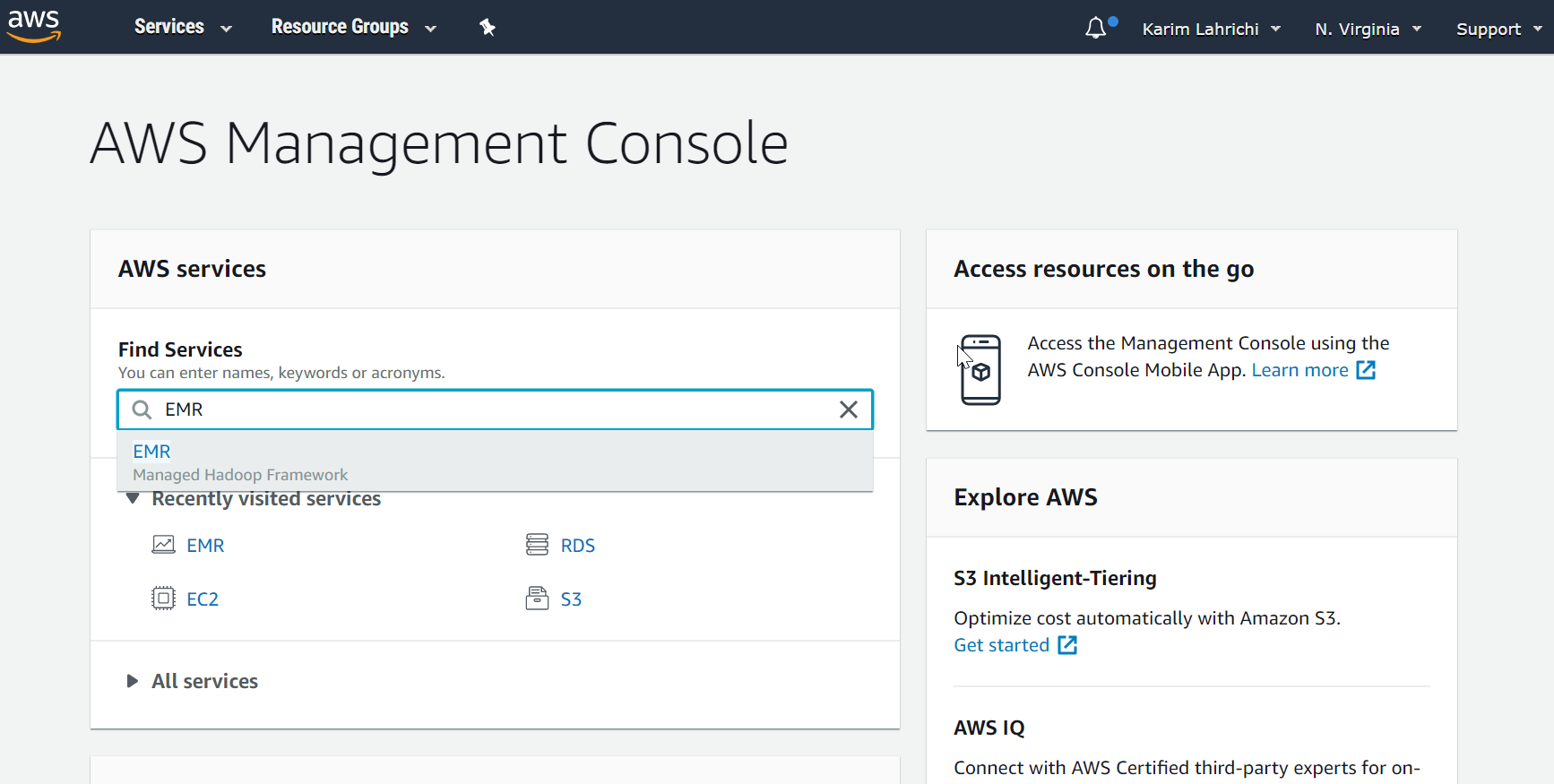

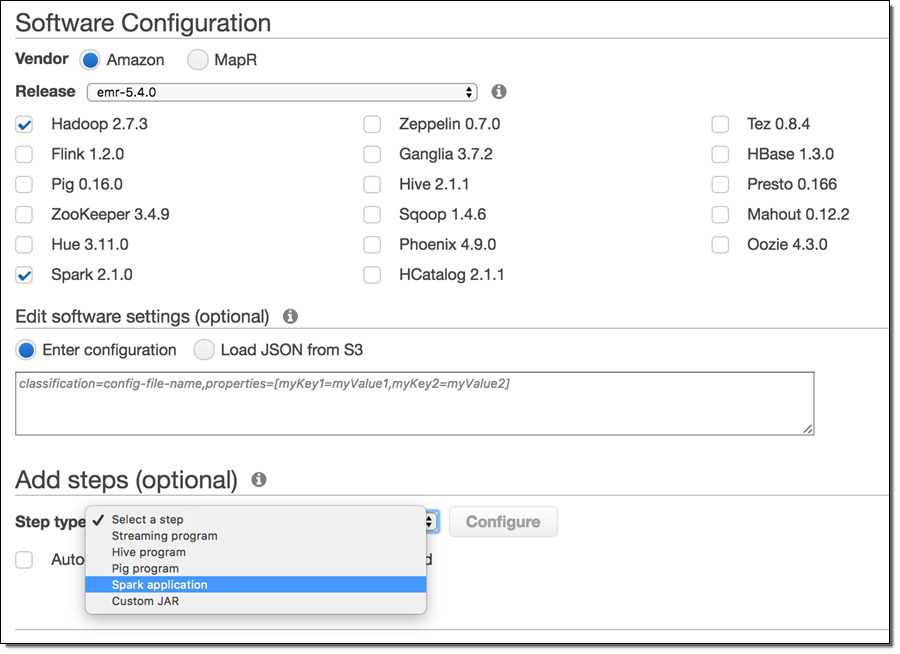

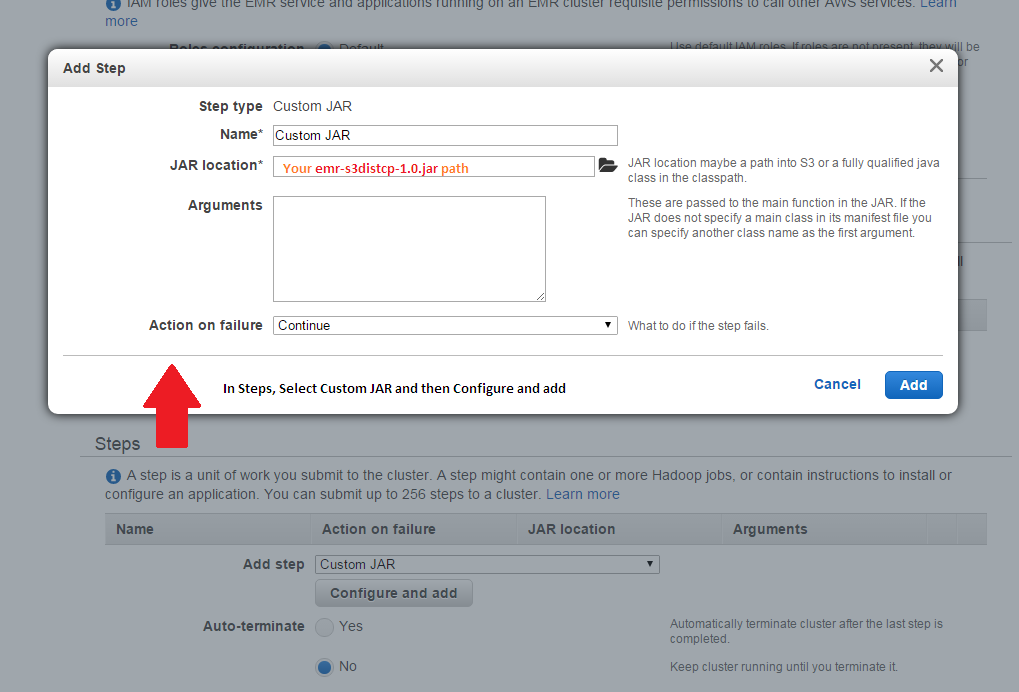

Competing with AWS In 12 I worked on a Hadoop implementation with 25 other contractors Some of my colleagues came from Google, others went on to work for Cloudera There was a significant budget involved, very little of the Hadoop ecosystem was turnkey and a lot of billable hours were produced by the team. Hadoop AWS First select the services and click on EMR from Analytics Then click on the add cluster Fill the Details of Cluster Cluster name as Ananthapurjntu Here we are checking the Logging Browse the s3 folder with the amar17/feb Launch mode should be Step Extension After that select step type as custom Read more. EMR is one of the most uptodate Hadoop distributions Uses Apache Big Top to package Hadoop applications and EMRprovided components in a fast and reliable manner EMR50 which came out on August 2 combined the very latest versions of Hadoop and other distributed compute applications including Spark , Hadoop 272, Hive 21, etc.

The hadoopaws JAR has to match your hadoop version exactly, so that one is fine Determining the Right Version Check your hadoop version Get the hadoopawsjar with the same exact version Go to the maven central page for the correct version of the hadoopawsjar and look at its compile dependencies. Amazon Elastic MapReduce (EMR) is a web service using which developers can easily and efficiently process enormous amounts of data It uses a hosted Hadoop framework running on the webscale infrastructure of Amazon EC2 and Amazon S3 Amazon EMR removes most of the cumbersome details of Hadoop, while take care for provisioning of Hadoop, running the job flow, terminating the job flow, moving. The problem is that S3AFileSystemcreate() looks for SemaphoredDelegatingExecutor(comgooglecommonutilconcurrentListeningExecutorService) which does not exist in hadoopclientapi311jar What does exist is SemaphoredDelegatingExecutor(orgapachehadoopshadedcomgooglecommonutilconcurrentListeningExecutorService) To work around this issue I created a version of hadoopaws311.

Setup & config instances on AWS;. Hadoop AWS First select the services and click on EMR from Analytics Then click on the add cluster Fill the Details of Cluster Cluster name as Ananthapurjntu Here we are checking the Logging Browse the s3 folder with the amar17/feb Launch mode should be Step Extension After that select step type as custom Read more. The default value for S3_BUCKET (hadoopec2images) is for public imagesImages for Hadoop version 0171 and later are in the hadoopimages bucket, so you should change this variable if you want to use one of these images You also need to change this if you want to use a private image you have built yourself.

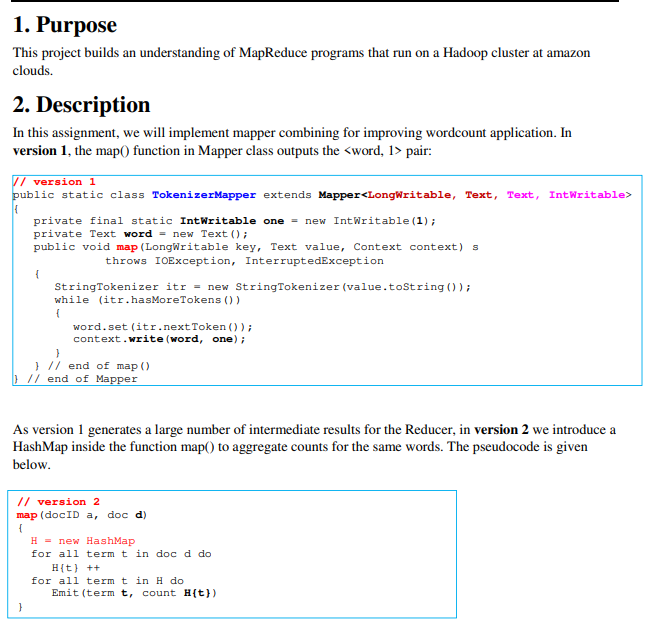

Most of the sample codes on web are for older versions of Hadoop Word Count Mapper Part 2 Amazon Web Services (AWS) What is AWS?. HADOOP remove jackson, joda and other transient aws SDK dependencies from hadoopaws Resolved HADOOP NetworkBinding has a runtime class dependency on a thirdparty shaded class. Amazon EMR (Elastic MapReduce) allows developers to avoid some of the burdens of setting up and administrating Hadoop tasks Learn how to optimize it Apache Hadoop is an open source framework designed to distribute the storage and processing of massive data sets across virtually limitless servers.

HADOOP remove jackson, joda and other transient aws SDK dependencies from hadoopaws Resolved HADOOP NetworkBinding has a runtime class dependency on a thirdparty shaded class. Hadoop 3 was released in December 17 It's a major release with a number of interesting new features It's early days but I've found so far in my testing it hasn't broken too many of the features or processes I commonly use day to day in my 2x installations. Most of the sample codes on web are for older versions of Hadoop Word Count Mapper Part 2 Amazon Web Services (AWS) What is AWS?.

Hadoop on AWS Cluster Starting up Cluster Finished Startup Master node public DNS Upload your jar file to run a job using steps, you can run a job by doing ssh to the master node as well (shown later) Location of jar file on s3 EMR started the master and worker nodes as EC2.

Analyze Your Data On Amazon Dynamodb With Apache Spark Aws Big Data Blog

Cloudera Enterprise Reference Architecture For Aws Deployments 5 15 X Cloudera Documentation

Apache Spark And The Hadoop Ecosystem On Aws

Aws Hadoop Jar のギャラリー

Configuring Your First Hadoop Cluster On Ec2

Implement Perimeter Security In Amazon Emr Using Apache Knox Aws Big Data Blog

D1 Awsstatic Com Whitepapers Amazon Emr Migration Guide Pdf

Running Pagerank Hadoop Job On Aws Elastic Mapreduce The Pragmatic Integrator

Modern Data Lake With Minio Part 2

Converting Google Analytics Json To S3 On Aws Sonra

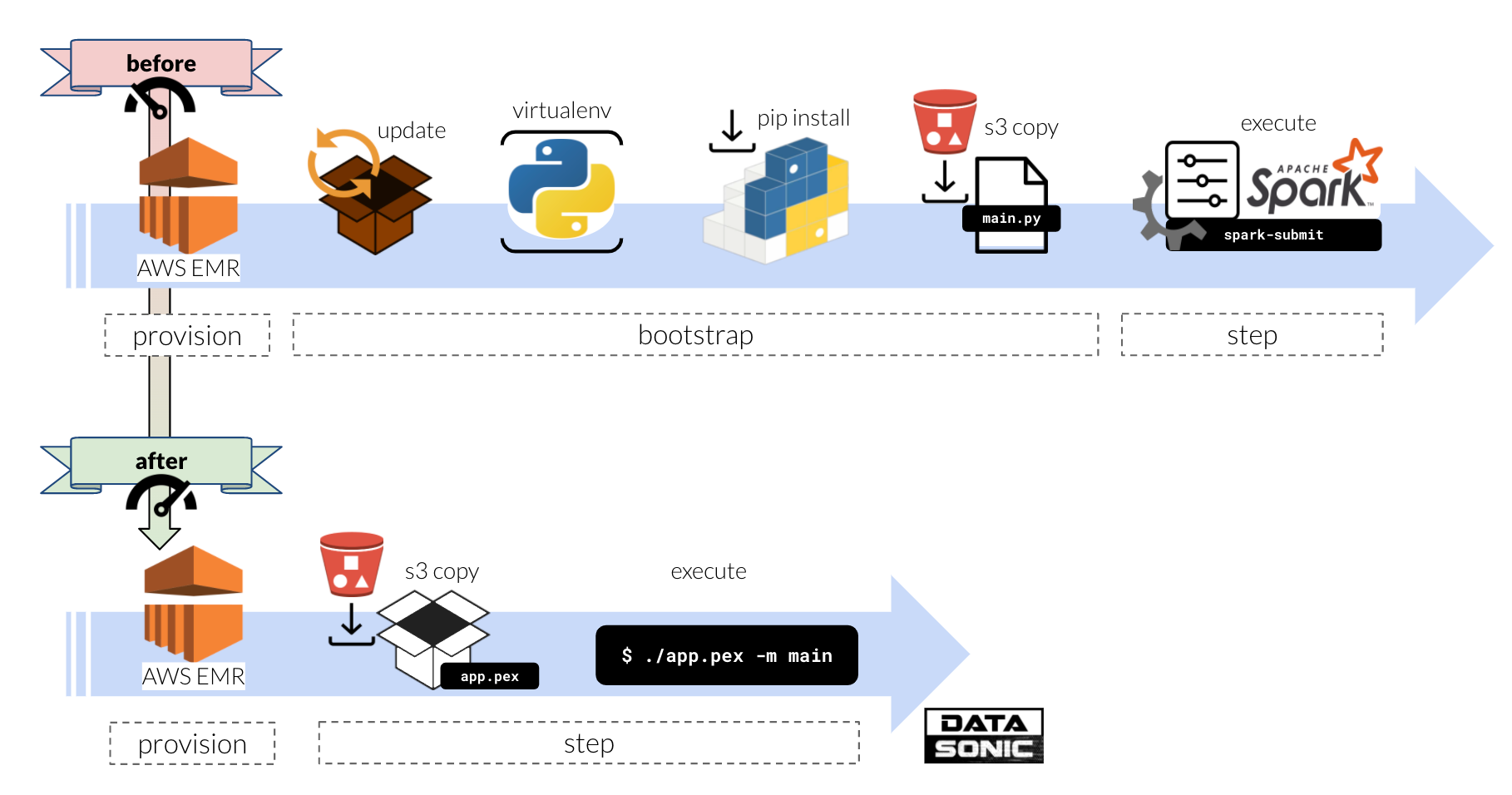

Pex The Secret Sauce For The Perfect Pyspark Deployment Of Aws Emr Workloads By Jan Teichmann Towards Data Science

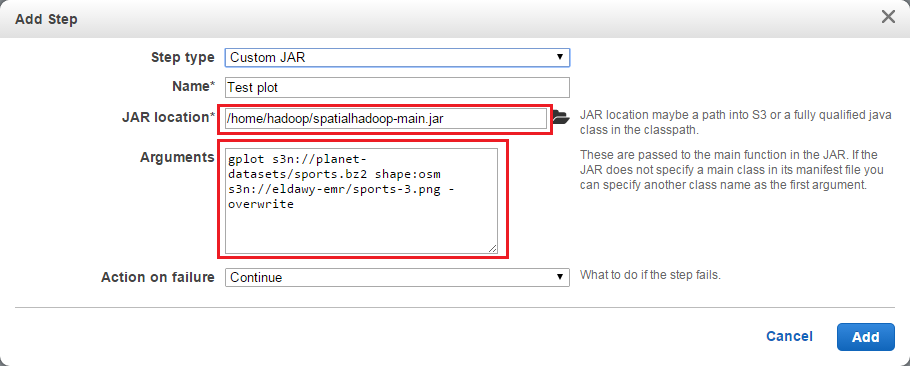

Spatialhadoop

Emr 4 2 0 Error During Running Of Custom Jar Command Runner Stack Overflow

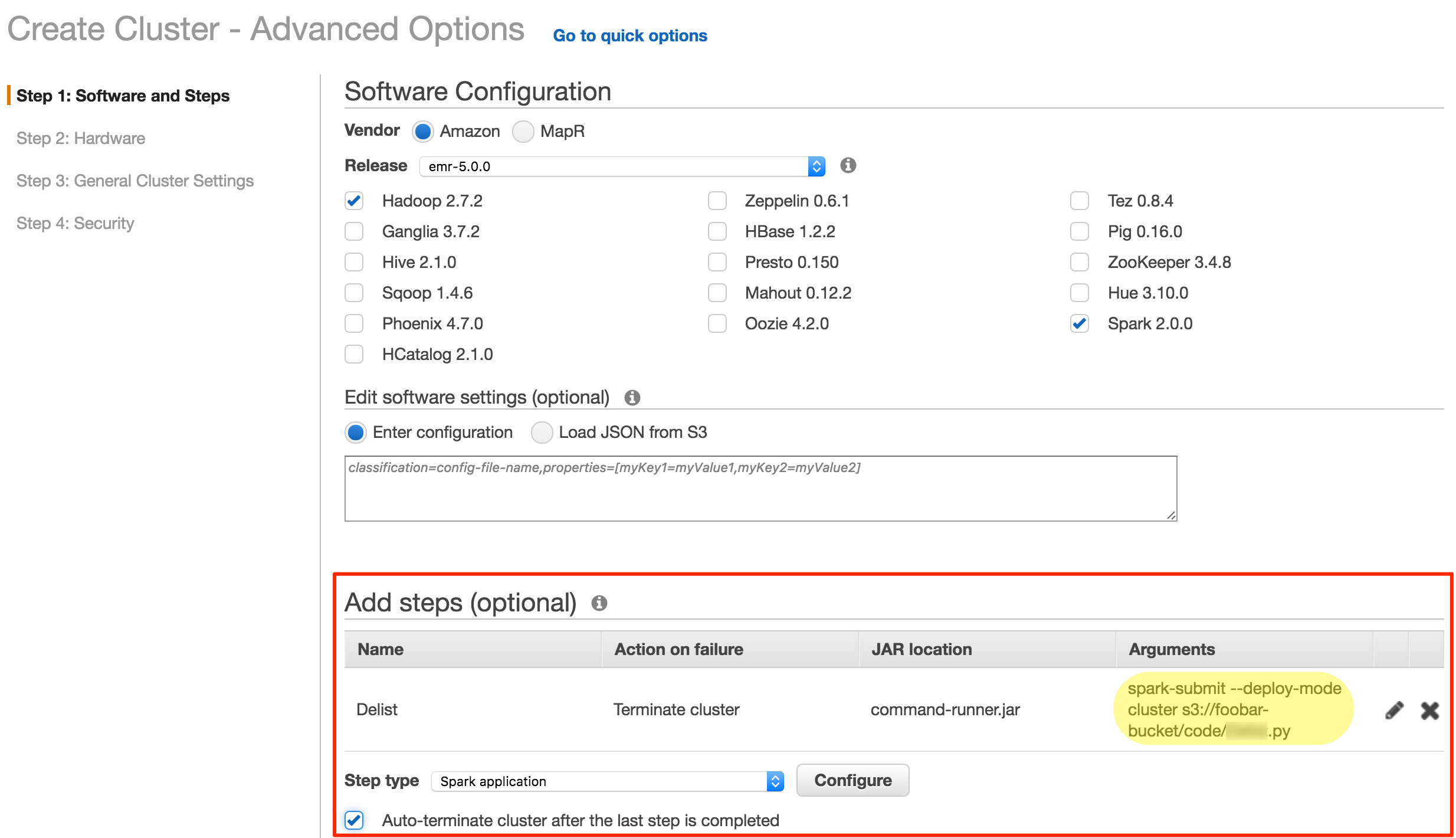

Etl Offload With Spark And Amazon Emr Part 3 Running Pyspark On Emr

Setup An Emr Cluster Via Aws Cli Standard Deviations

Tune Hadoop And Spark Performance With Dr Elephant And Sparklens On Amazon Emr Aws Big Data Blog

Analyzing Big Data With Spark And Amazon Emr Blog

1

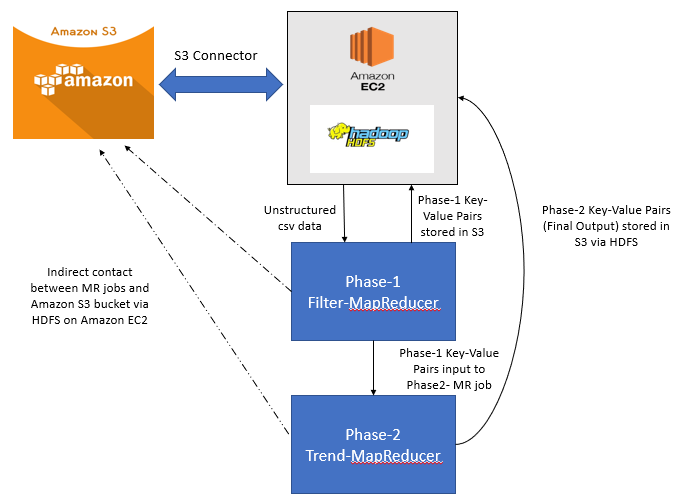

Map Reduce With Python And Hadoop On Aws Emr By Chiefhustler Level Up Coding

Adding Streaming Job Step On Emr Learning Big Data With Amazon Elastic Mapreduce

Snap Stanford Edu Class Cs341 13 Downloads Amazon Emr Tutorial Pdf

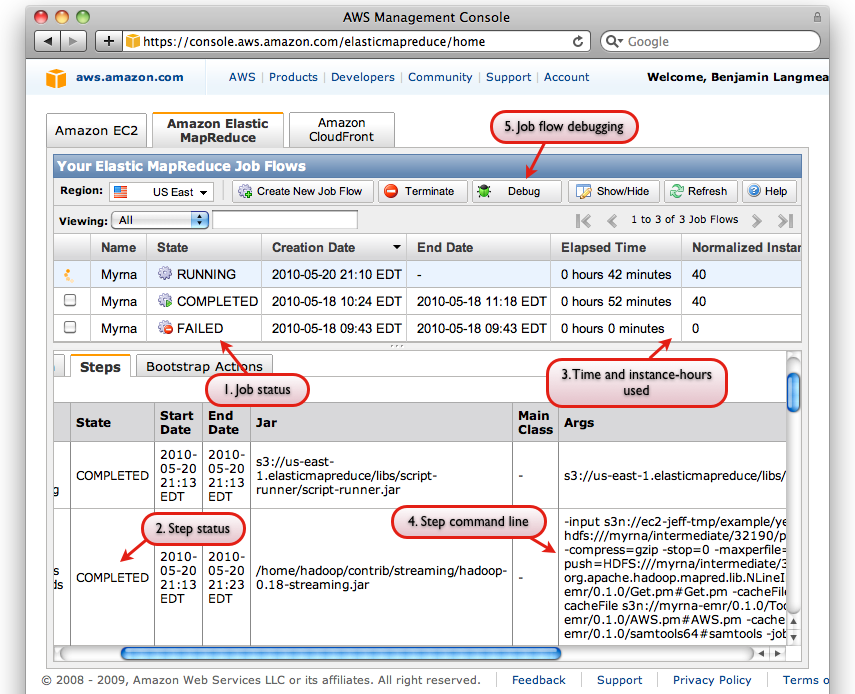

Emr Series 2 Running And Troubleshooting Jobs In An Emr Cluster Loggly

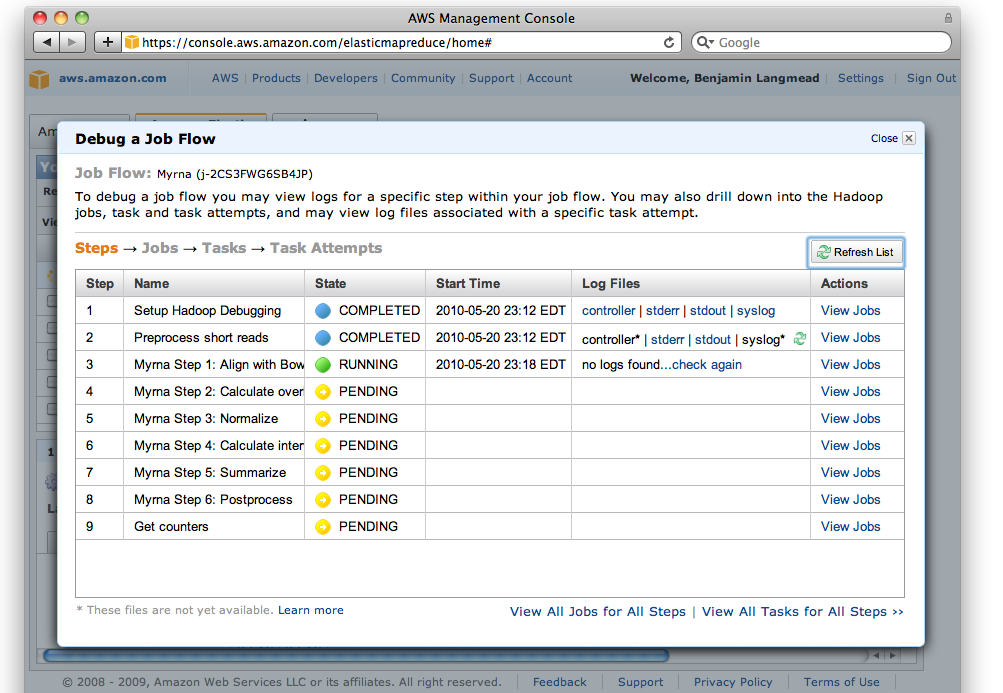

Enhanced Step Debugging Amazon Emr

Aws Lambda Function To Launch Emr With Hadoop Map Reduce Python

Q Tbn And9gcsy Ui Rh2jr2v935milwhh6uwqjdh V3vvwzdedou2c5to37ip Usqp Cau

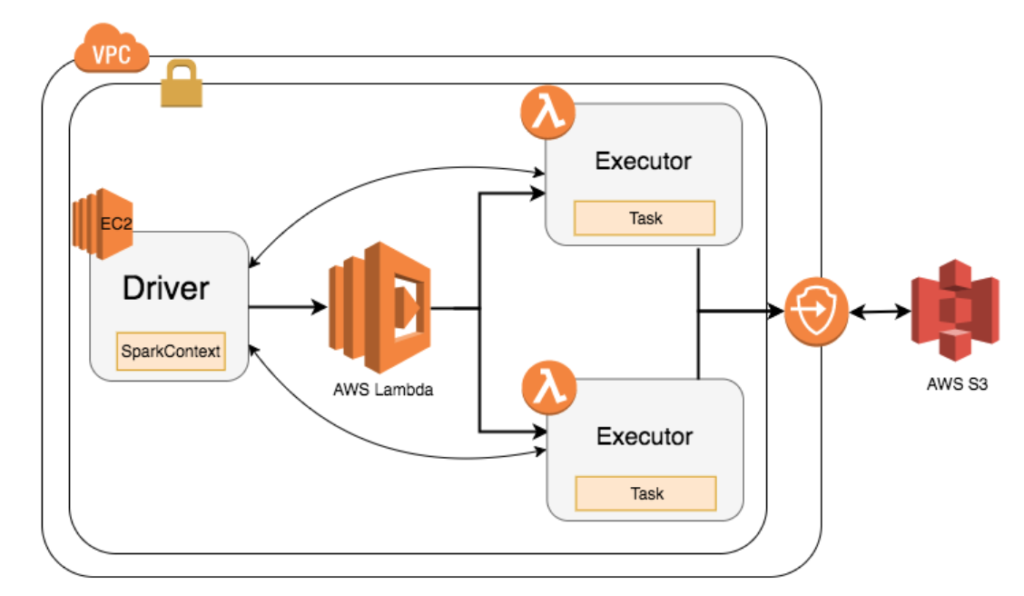

Qubole Announces Apache Spark On Aws Lambda

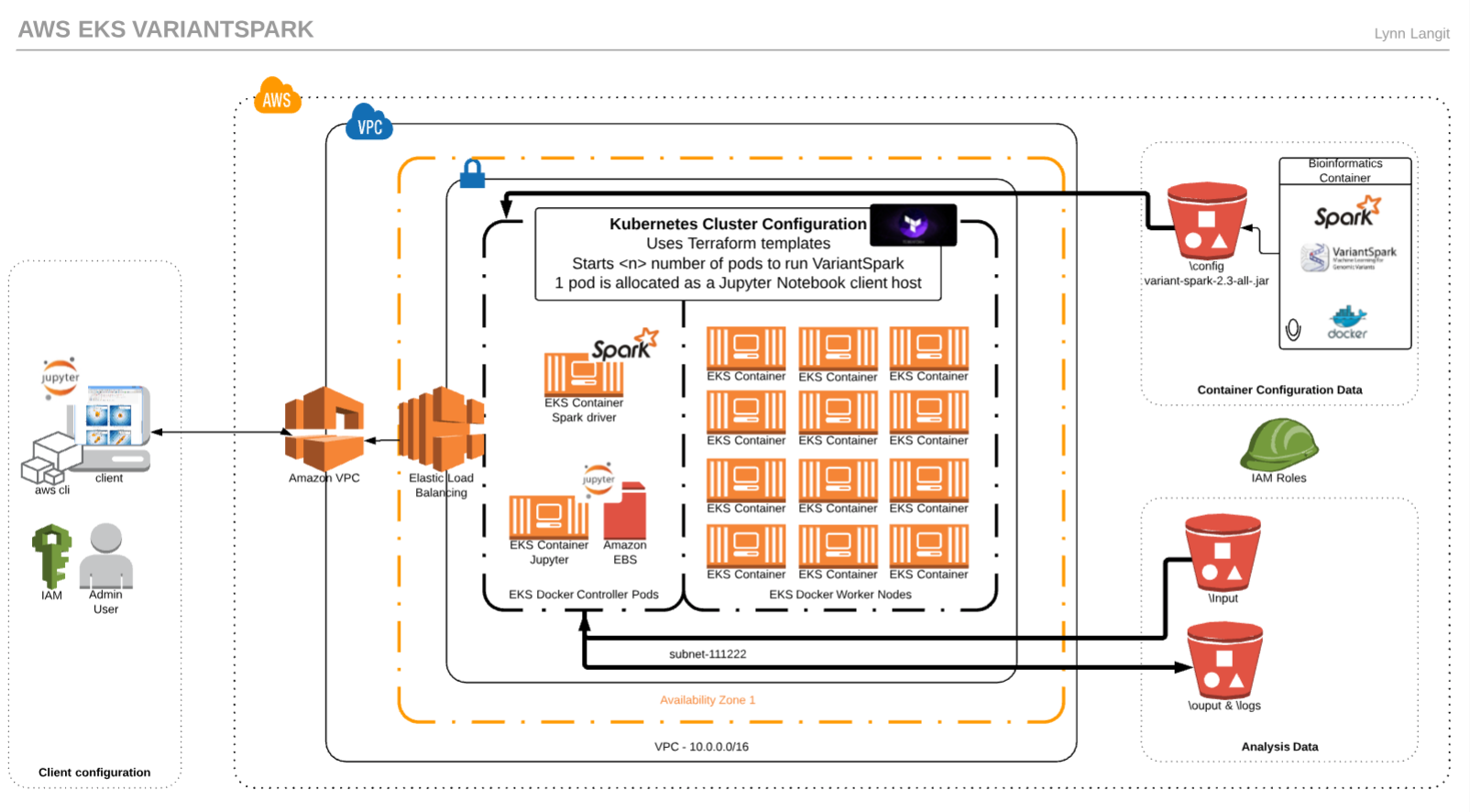

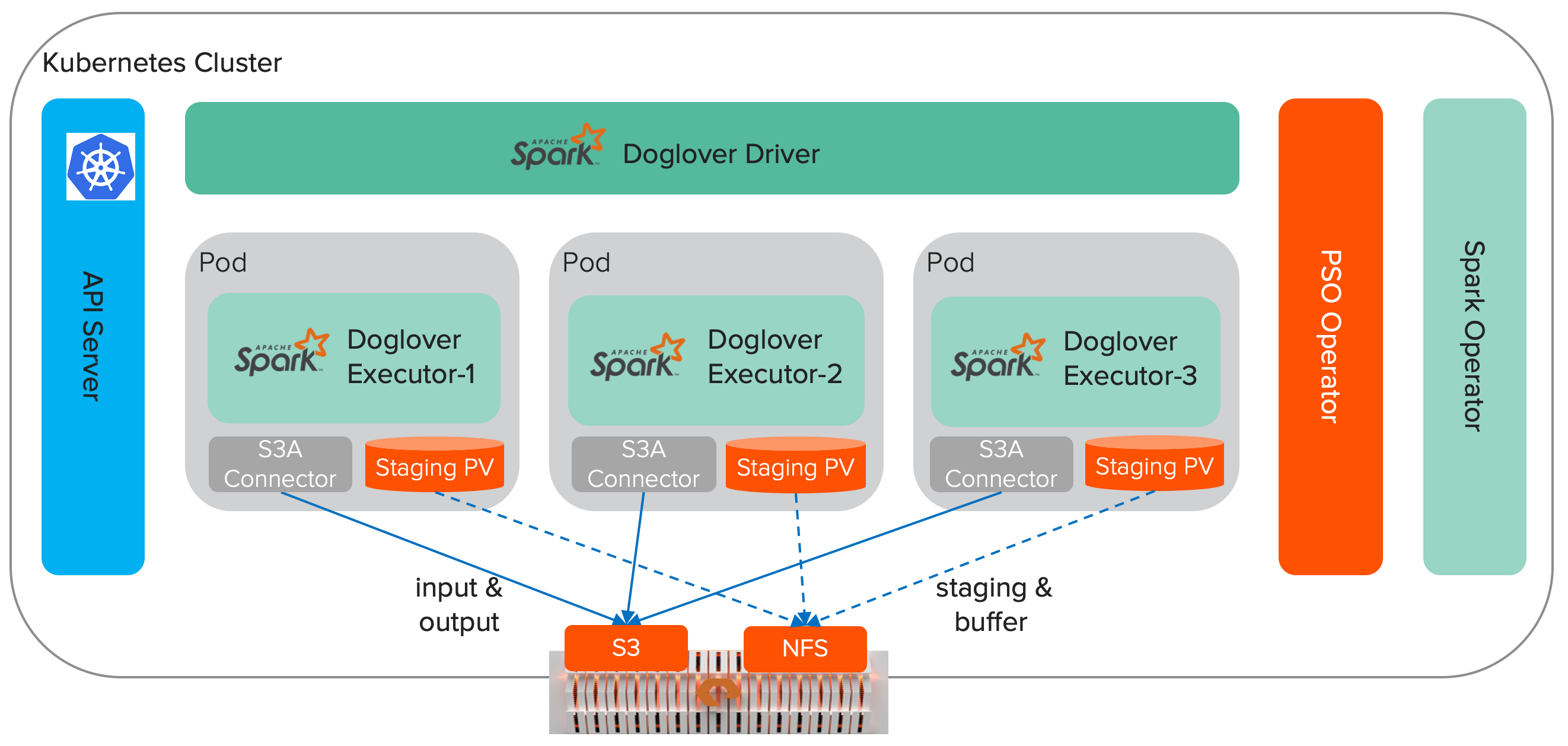

Scaling Custom Machine Learning On Aws Part 3 Kubernetes By Lynn Langit Medium

Hadoop Tutorial 3 1 Using Amazon S Wordcount Program Dftwiki

Big Data And Cloud Tips Debugging A Hadoop Mapreduce Program In Eclipse

Set Up Hadoop Multi Nodes Cluster On Aws Ec2 A Working Example Using Python With Hadoop Streaming Filipyoo

Running Pagerank Hadoop Job On Aws Elastic Mapreduce The Pragmatic Integrator

Let S Try Hadoop On Aws A Simple Hadoop Cluster With 4 Nodes A By Gael Foppolo Gael Foppolo

Determine Compatibility Of Hadoop Aws And Aws Java Sdk Bundle Jars Coding Stream Of Consciousness

Custom Datasource Plugin On Emr Throwing Java Lang Noclassdeffounderror Scalaj Http Http Stack Overflow

Connecting Glue Catalog To Domino For Use With On Demand Spark Domino Community

Running A Custom Java Jar On An Aws Emr Cluster Part 3 3 Youtube

Using Aws Systems Manager Run Command To Submit Spark Hadoop Jobs On Amazon Emr Aws Management Governance Blog

Solved I Run A Hadoop Job But It Got Stucked And Nothing Cloudera Community

Try Hadoop In 5 Minutes Hadoop In Real World

Map Reduce With Python And Hadoop On Aws Emr By Chiefhustler Level Up Coding

How To Integrate Amazon S3 With Denodo Distributed File System Custom Wrapper

Aws Markobigdata

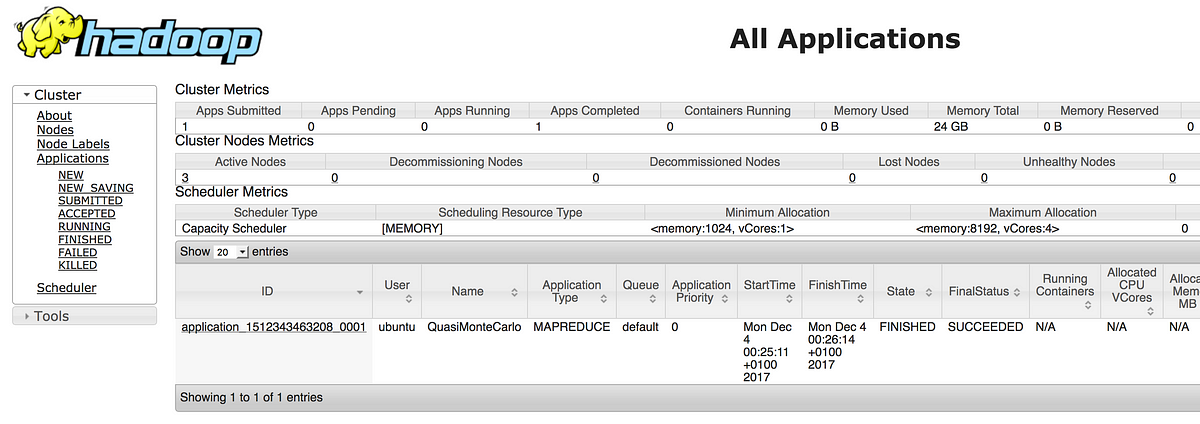

Running A Mapreduce Program On Amazon Ec2 Hadoop Cluster With Yarn Eduonix Blog

Using Spark Sql For Etl Aws Big Data Blog

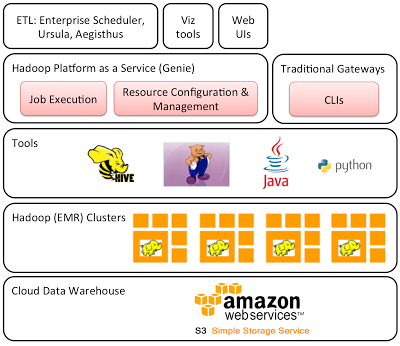

Hadoop Platform As A Service In The Cloud By Netflix Technology Blog Netflix Techblog

1

Apache Spark And The Hadoop Ecosystem On Aws

Map Reduce With Python And Hadoop On Aws Emr By Chiefhustler Level Up Coding

Simply Install Apache Hadoop Hadoop Is One Of The Most Mature And By Hoa Nguyen Insight

Running Pagerank Hadoop Job On Aws Elastic Mapreduce The Pragmatic Integrator

Best Practices For Securing Amazon Emr Aws Big Data Blog

Set Up Hadoop Multi Nodes Cluster On Aws Ec2 A Working Example Using Python With Hadoop Streaming Filipyoo

Build A Healthcare Data Warehouse Using Amazon Emr Amazon Redshift Aws Lambda And Omop Aws Big Data Blog

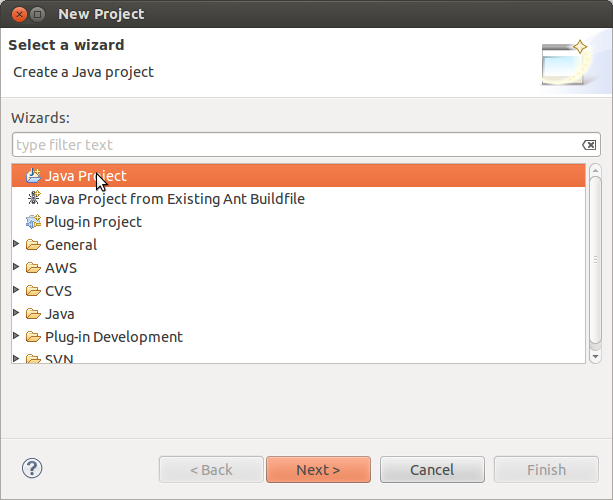

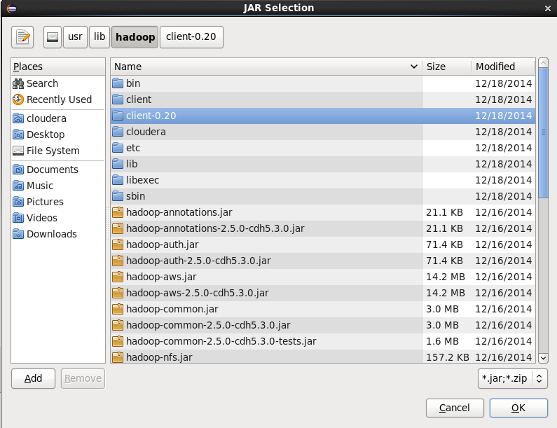

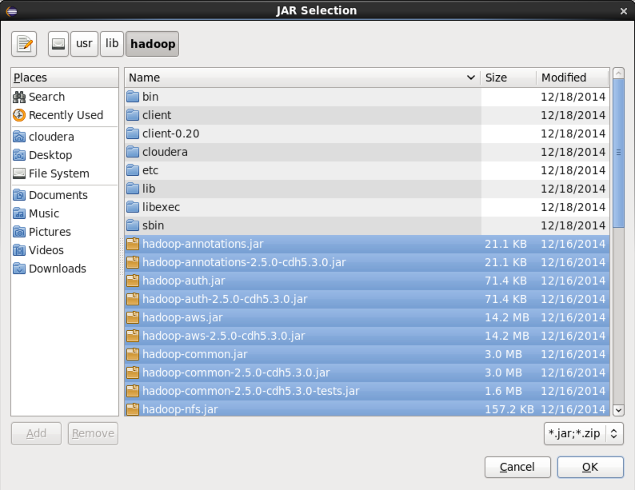

Apache Hadoop Creating Card Java Project With Eclipse Using Cloudera Vm Unoexample For Cdh5

Deploying Etl On Amazon Emr Fingo Blog

Www Netapp Com Media Tr 4529 Pdf

Running Apache Spark And S3 Locally

Myrna Manual

Using Aws Systems Manager Run Command To Submit Spark Hadoop Jobs On Amazon Emr Aws Management Governance Blog

Aws Data Pipeline With Hive

Tips For Migrating To Apache Hbase On Amazon S3 From Hdfs Aws Big Data Blog

Q Tbn And9gcsruzccpu Mdigc3nrzq Qrzvc8xf6wgj1cs2z1janlrptq9bez Usqp Cau

Apache Hadoop Creating Wordcount Java Project With Eclipse

Relationship Of Hadoop And Qlikview Qlikview Integration With Hadoop Dataflair

Myrna Manual

Hadoop Tutorial 2 3 Running Wordcount In Python On Aws Dftwiki

Installing Oracle Data Integrator Odi On Amazon Elastic Mapreduce Emr A Team Chronicles

Metadata Classification Lineage And Discovery Using Apache Atlas On Amazon Emr Aws Big Data Blog

A Step By Step Guide To Install Hadoop Cluster On Amazon Ec2 Eduonix Blog

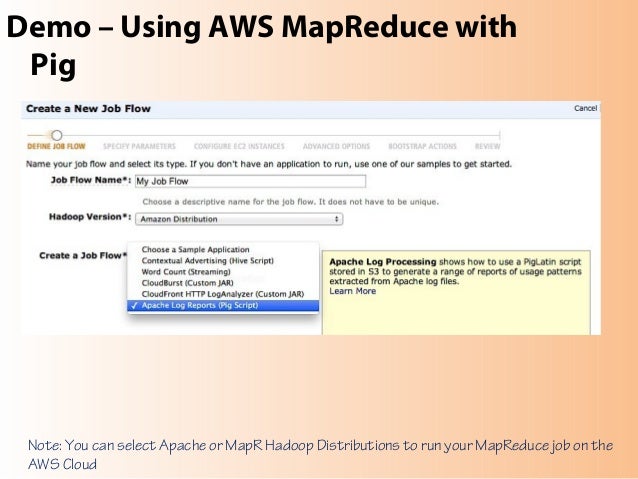

Emr Training Amazon Web Services

Hadoop 101 Multi Node Installation Using Aws Ec2 Codethief

New Apache Spark On Amazon Emr Aws News Blog

Encrypt Data In Transit Using A Tls Custom Certificate Provider With Amazon Emr Aws Big Data Blog

Tune Hadoop And Spark Performance With Dr Elephant And Sparklens On Amazon Emr Aws Big Data Blog

Big Data And Cloud Tips April 13

Running Apache Spark And S3 Locally

Scoring Snowflake Data Via Datarobot Models On Aws Emr Spark Datarobot Community

Beginner Tips For Elastic Mapreduce Opensource Connections

Migrating Apache Spark Workloads From Aws Emr To Kubernetes By Dima Statz Itnext

Wordcount On Amazon Aws Hadoop Cluster Youtube

Set Up Spark Job Server On An Emr Cluster Francois Botha

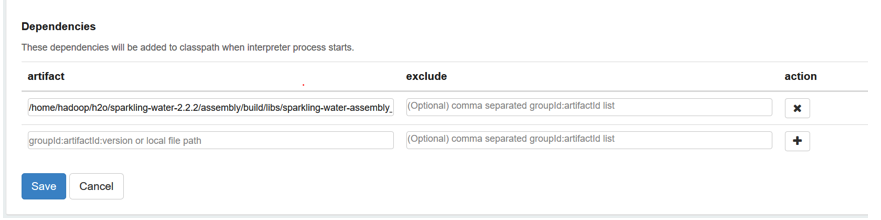

Welcome To H2o 3 H2o 3 32 0 3 Documentation

Amazon Emr Five Ways To Improve The Way You Use Hadoop

Emr Series 2 Running And Troubleshooting Jobs In An Emr Cluster Loggly

Migrate And Deploy Your Apache Hive Metastore On Amazon Emr Aws Big Data Blog

How To Integrate Amazon S3 With Denodo Distributed File System Custom Wrapper

Running Pagerank Hadoop Job On Aws Elastic Mapreduce The Pragmatic Integrator

Apache Spark With Kubernetes And Fast S3 Access By Yifeng Jiang Towards Data Science

Avishkar Install Hadoop On Centos On Amazon Aws Ec2 Instance

Map Reduce With Amazon Ec2 And S3 By Sanchit Gawde Medium

Hadoop And Amazon Web Services Ken Krugler Hadoop And Aws Overview Ppt Download

Using H2o With Amazon Aws H2o 3 0 10 Documentation

Using Eclipse Maven Build I Need Jar File To Run Chegg Com

Apache Spark And Hadoop On An Aws Cluster With Flintrock Part 4 By Jon C 137 Medium

Let S Try Hadoop On Aws A Simple Hadoop Cluster With 4 Nodes A By Gael Foppolo Gael Foppolo

Www Netapp Com Media Tr 4529 Pdf

Creating Ec2 Instances In Aws To Launch A Hadoop Cluster Hadoop In Real World

Http Docs Cascading Org Tutorials Cascading Aws Part2 Html

Amazon Emr Masterclass

Aws Ec2 Builds Hadoop And Spark Clusters Programmer Sought

Hadoop On Iaas Part 2 Oracle Pat Shuff S Blog

Pyspark Connect To Aws S3a Filesystem By Diary Of A Codelovingyogi Medium

Simply Install Apache Hadoop Hadoop Is One Of The Most Mature And By Hoa Nguyen Insight

Amazon Emr Five Ways To Improve The Way You Use Hadoop

Beginner Tips For Elastic Mapreduce Opensource Connections