AWS Hadoop Tutorial

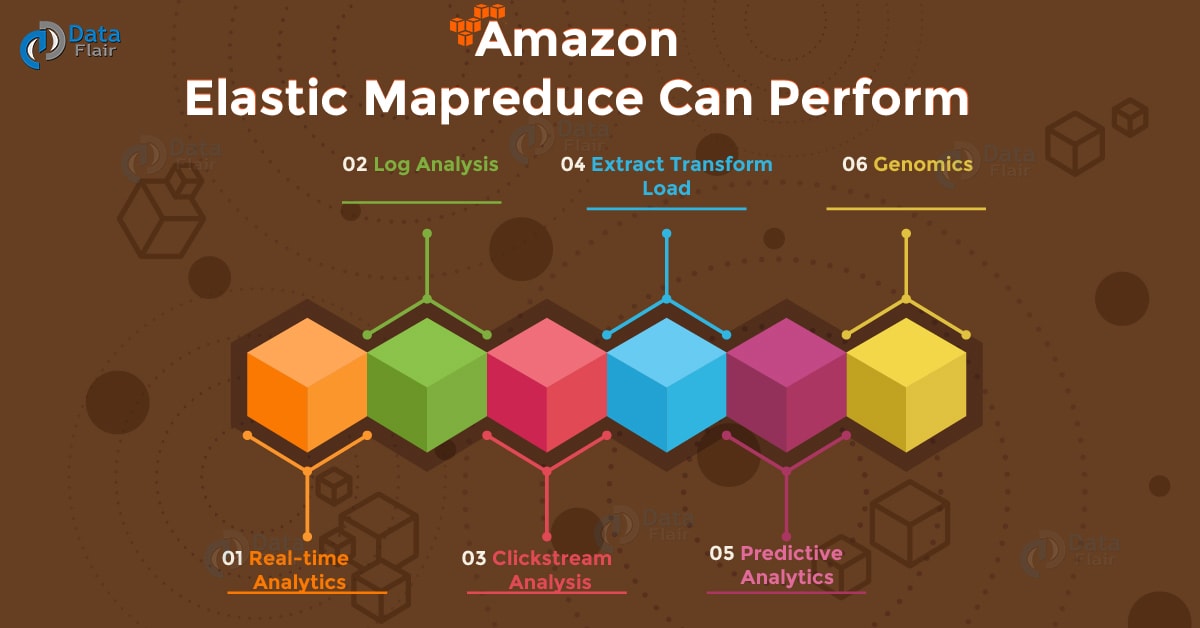

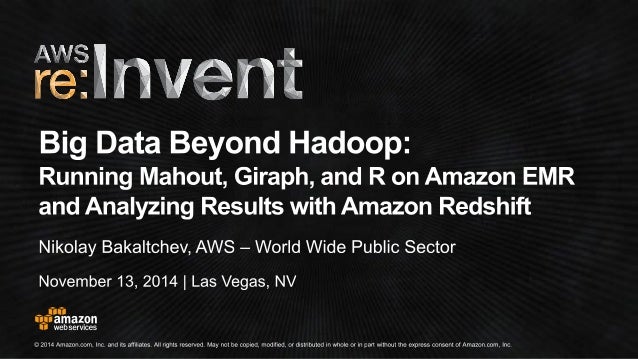

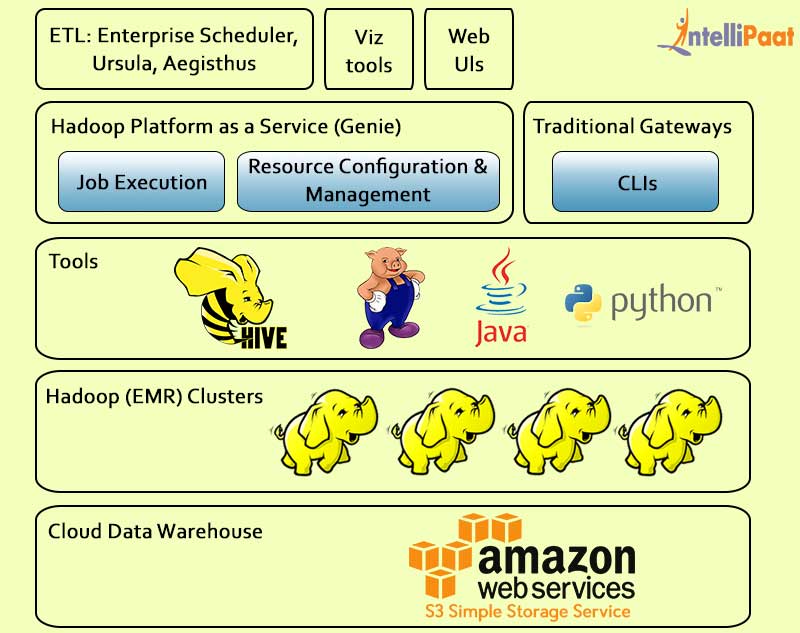

AWS will show you how to run Amazon Elastic MapReduce jobs to process data using the broad ecosystem of Hadoop tools like Pig and Hive. AWS will also teach you how to create big data environments in the cloud by working with Amazon DynamoDB and Amazon Redshift, understand the benefits of Amazon Kinesis, and leverage best practices to design big data environments for analysis, security, and cost-effectiveness.

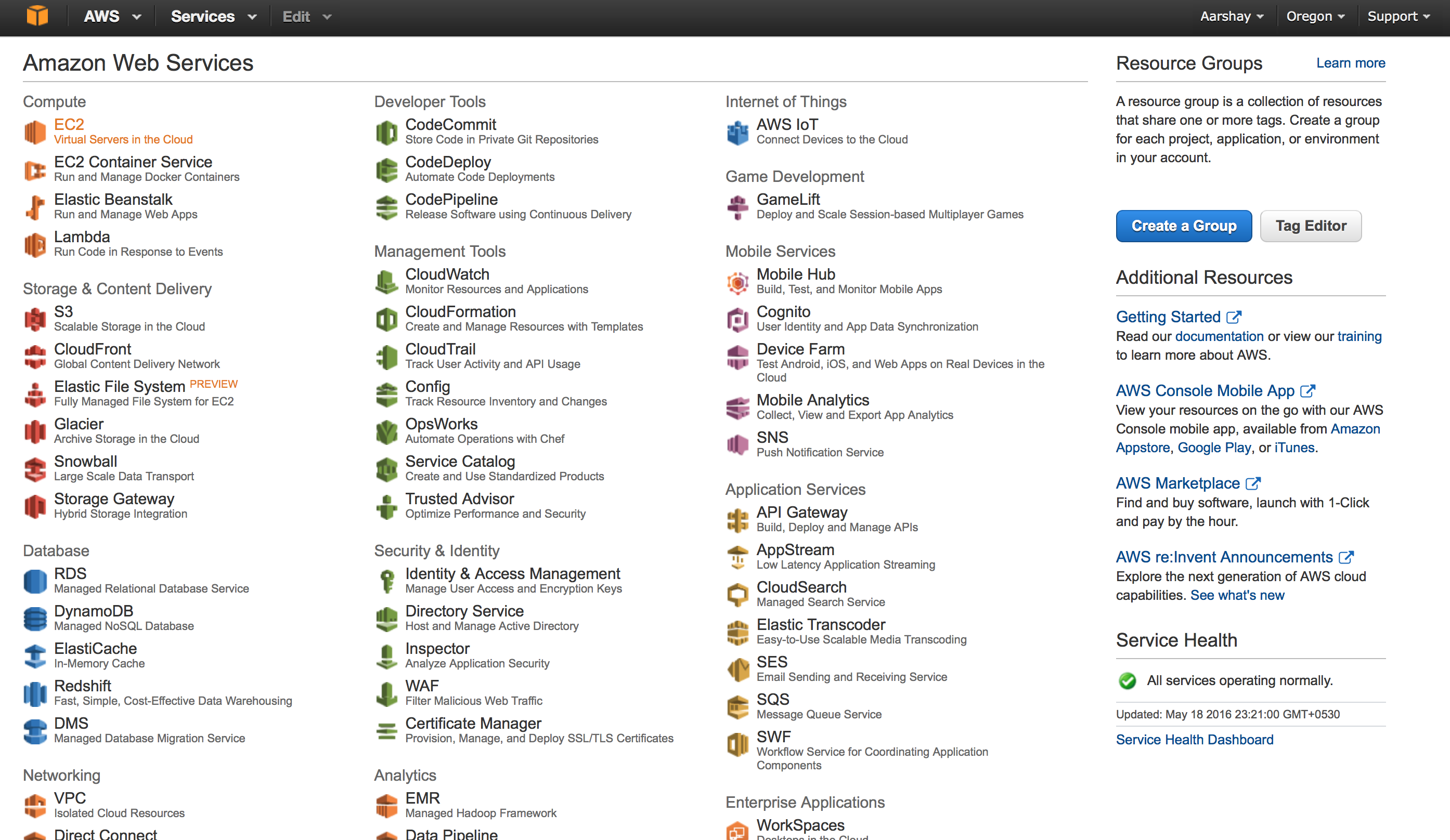

1.1 Login to Amazon AWS. 1.1.1 Click on Sign In to AWS Management Console.

Hadoop is a technology with which you can run big data jobs using a MapReduce program. AWS is a mixed bag of services, including (1) virtual servers, (2) virtual storage, (3) virtual networks, (4) databases, and (5) message queue services like SQS.

This tutorial is divided into two parts. In the first part, we demonstrate how to set up instances on Amazon Web Services (AWS), a cloud computing platform that enables us to quickly provision virtual servers. In the second part, we demonstrate how to install Hadoop on the four-node cluster we created.

This AWS tutorial covers basic and advanced concepts and is designed for beginners and professionals. AWS stands for Amazon Web Services, which uses distributed IT infrastructure to provide different IT resources on demand. The tutorial includes topics such as introduction, history of AWS, global infrastructure, features of AWS, IAM, storage services, and database services.

Hey everyone! In this article, I will show you an interesting automation in which we set up a Hadoop cluster (HDFS) on top of the AWS cloud (EC2), and we will do everything using a dedicated tool.

To set up your own, go to Quick Start and select one. For preconfigured AMIs, select one from AWS Marketplace.

Automating Hadoop Computations on AWS: a software engineer gives a tutorial on working with Hadoop clusters in an AWS S3 environment, using some Python code to help automate Hadoop's computations.

1.1.6 Access Key, Secret Key.

The cloud storage provided by Amazon Web Services is safe, secure, and highly durable. With AWS you can build applications for colleagues, consumers, enterprise support, or e-commerce. The applications built with AWS are highly sophisticated and scalable.

5. AWS Tutorial – Features: Let’s discuss the features of Amazon Web Services.

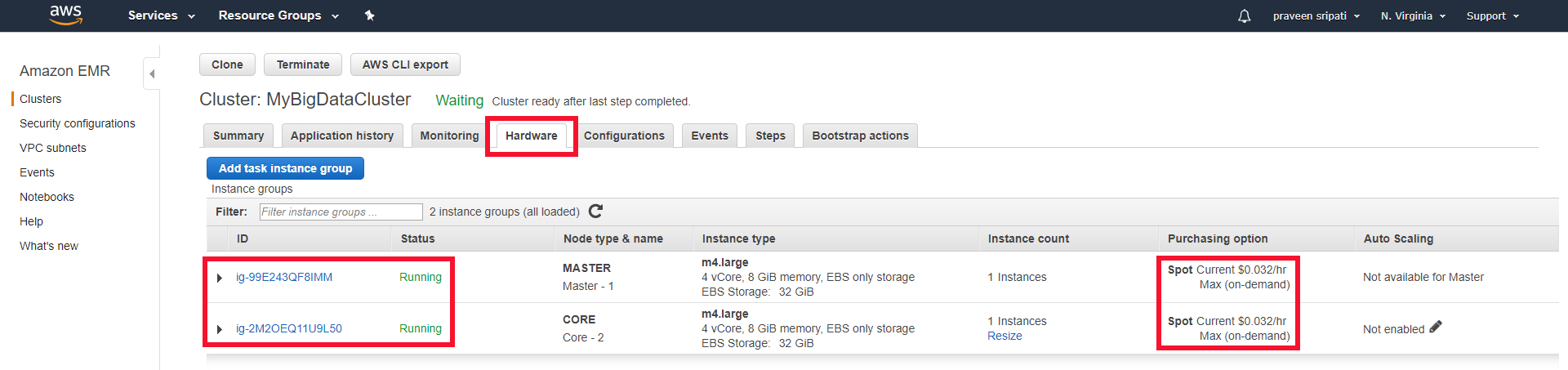

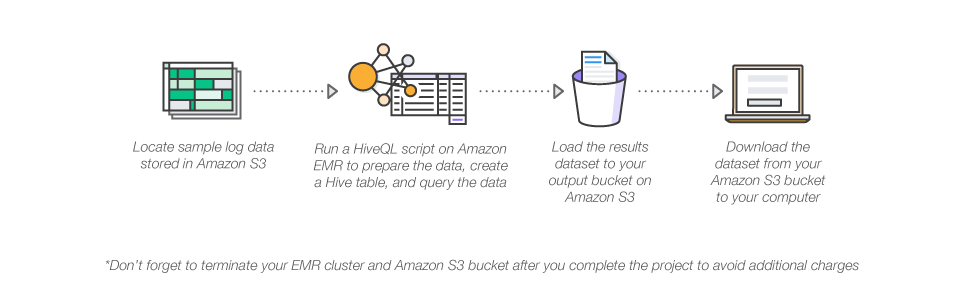

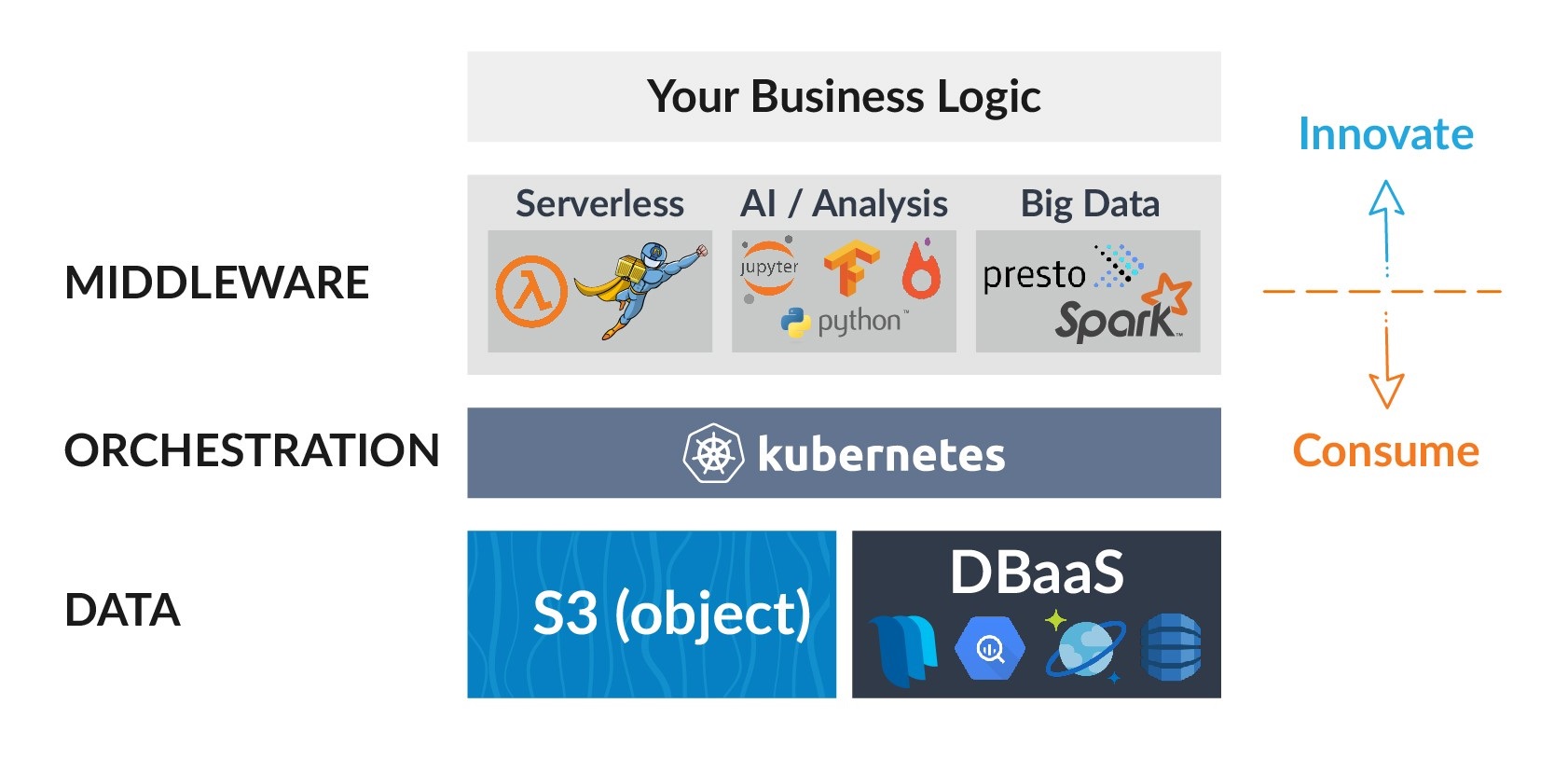

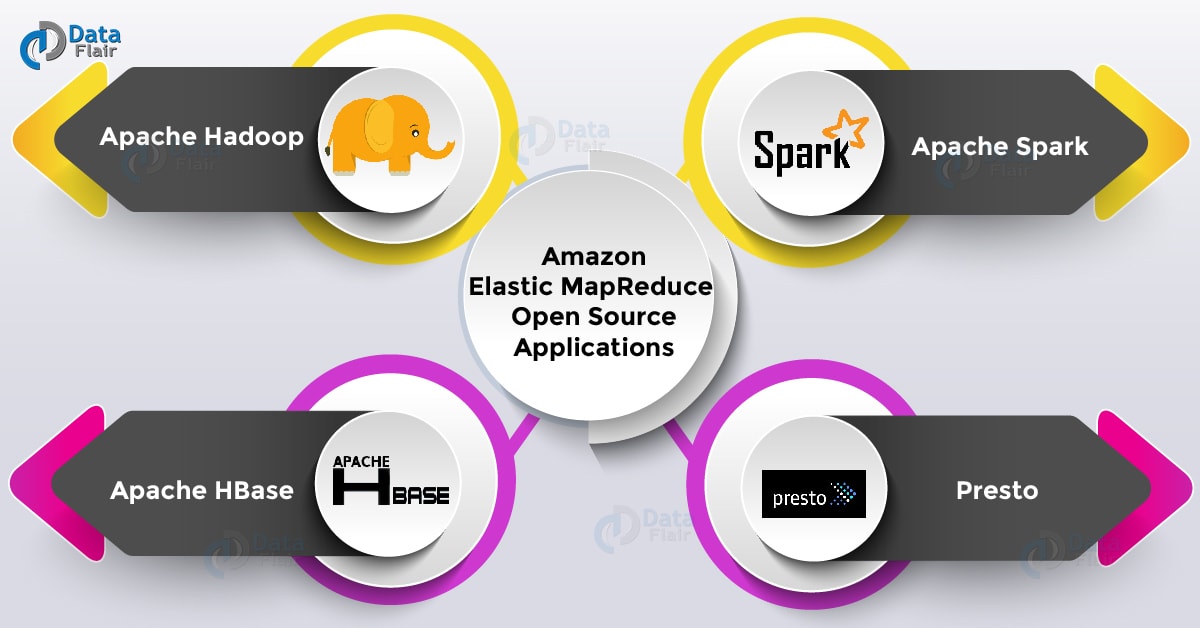

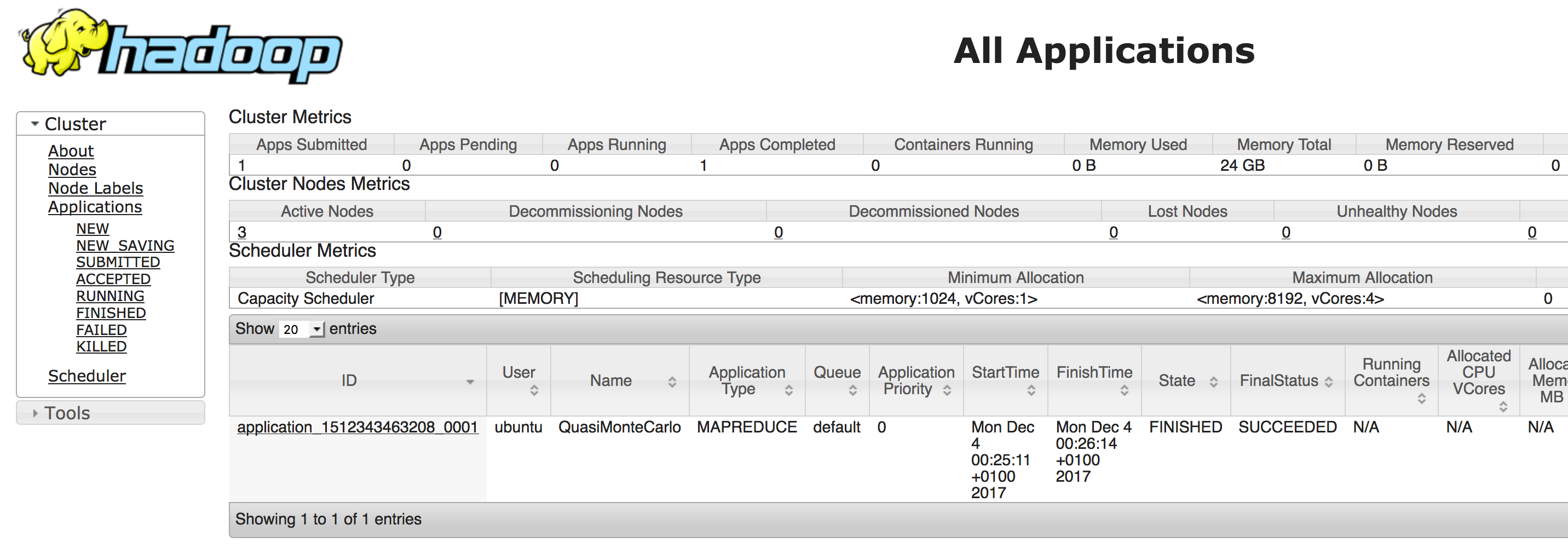

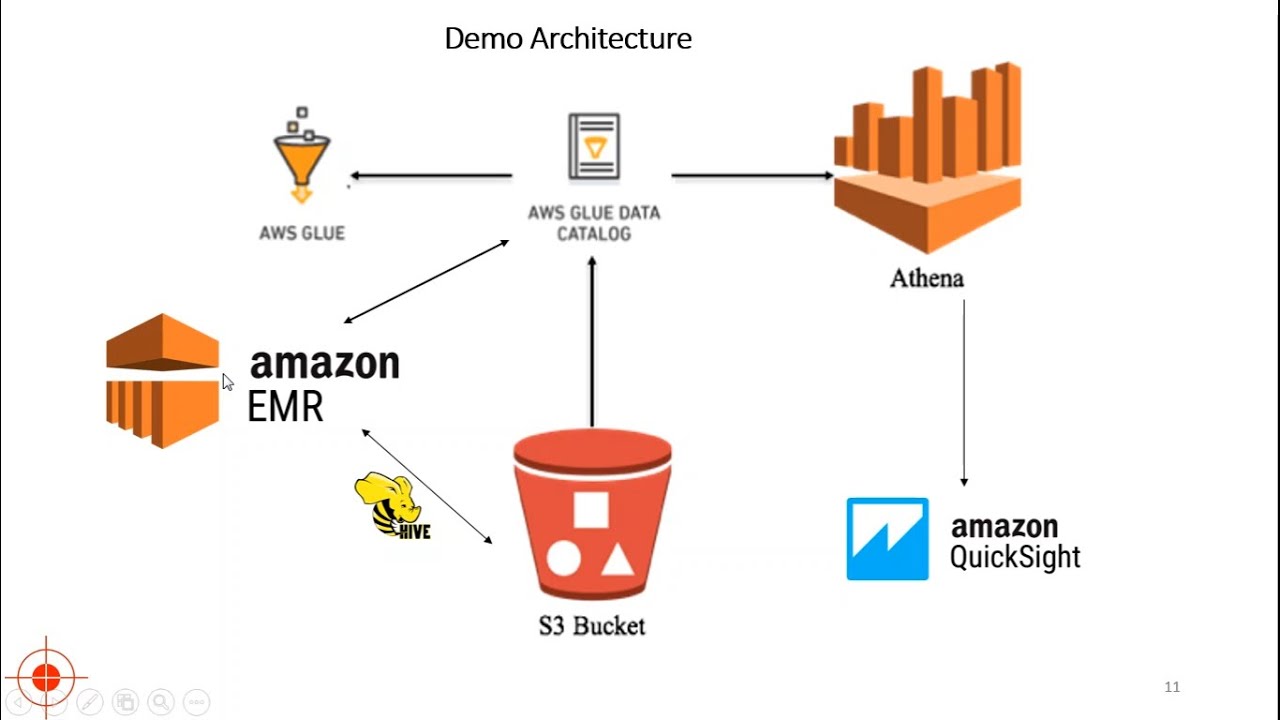

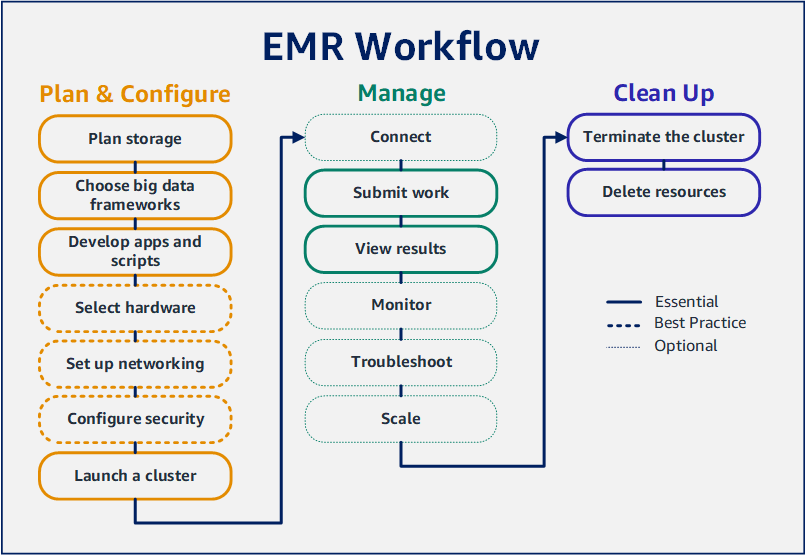

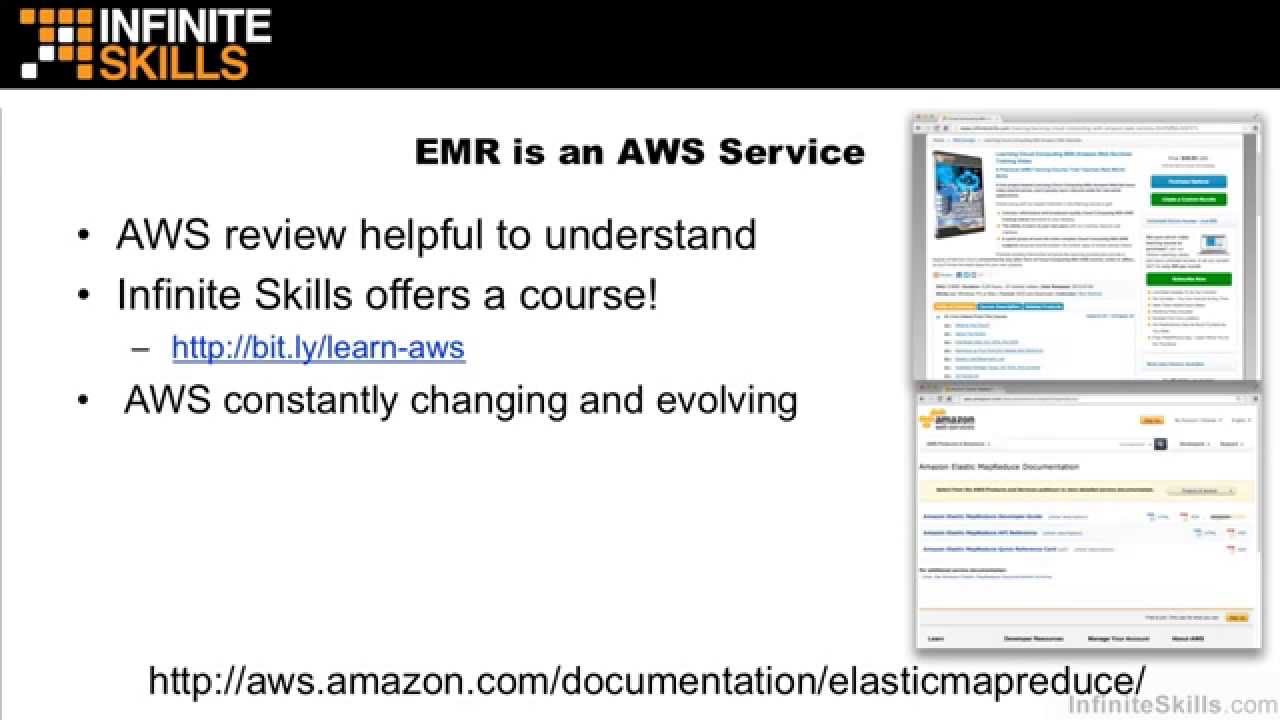

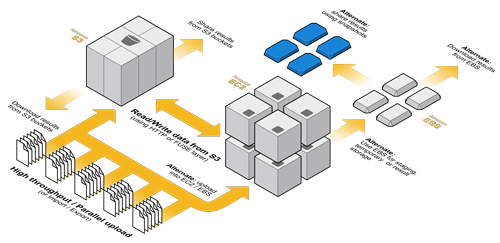

Running Hadoop on AWS: Amazon EMR is a managed service that lets you process and analyze large datasets using the latest versions of big data processing frameworks such as Apache Hadoop, Spark, HBase, and Presto on fully customizable clusters.

Launch a fully functional Hadoop cluster using Amazon EMR. Define the schema and create a table for sample log data stored in Amazon S3. Analyze the data using a HiveQL script and write the results back to Amazon S3. Download and view the results on your computer.
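The first step above, launching a Hadoop cluster on Amazon EMR, can also be scripted. The following is a minimal sketch, assuming the `boto3` library is installed and AWS credentials are already configured. The cluster name, log bucket, release label, instance type, and node count are illustrative assumptions rather than values taken from any of the tutorials quoted here, and the two role names are the EMR default roles, which must already exist in the account.

```python
def build_emr_cluster_request(name, log_uri, release_label="emr-6.15.0",
                              instance_type="m5.xlarge", instance_count=3):
    """Build keyword arguments for the EMR run_job_flow API call.

    All default values are assumptions chosen for illustration only.
    """
    return {
        "Name": name,
        "LogUri": log_uri,  # S3 location for cluster logs
        "ReleaseLabel": release_label,
        # Install Hadoop plus Hive so HiveQL scripts can run on the cluster.
        "Applications": [{"Name": "Hadoop"}, {"Name": "Hive"}],
        "Instances": {
            "MasterInstanceType": instance_type,
            "SlaveInstanceType": instance_type,
            "InstanceCount": instance_count,
            # Keep the cluster running after steps finish (it is billed until terminated).
            "KeepJobFlowAliveWhenNoSteps": True,
            "TerminationProtected": False,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


def launch_cluster(request, region_name="us-east-1"):
    """Submit the request to EMR and return the new cluster (job flow) ID.

    Calling this creates billable AWS resources.
    """
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("emr", region_name=region_name)
    response = client.run_job_flow(**request)
    return response["JobFlowId"]
```

Building the request separately from submitting it makes it possible to inspect or log the configuration before any billable resources are created. A call such as `launch_cluster(build_emr_cluster_request("demo", "s3://example-bucket/logs/"))` would then start the cluster, where `example-bucket` is a hypothetical bucket name.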

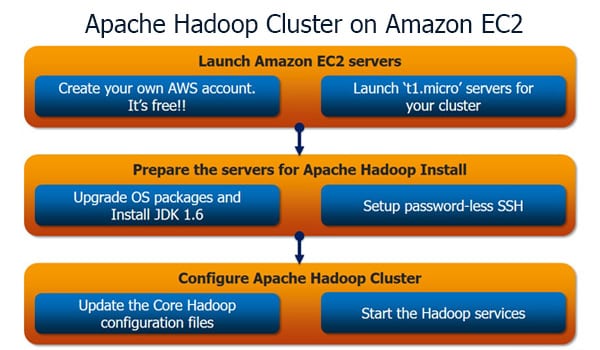

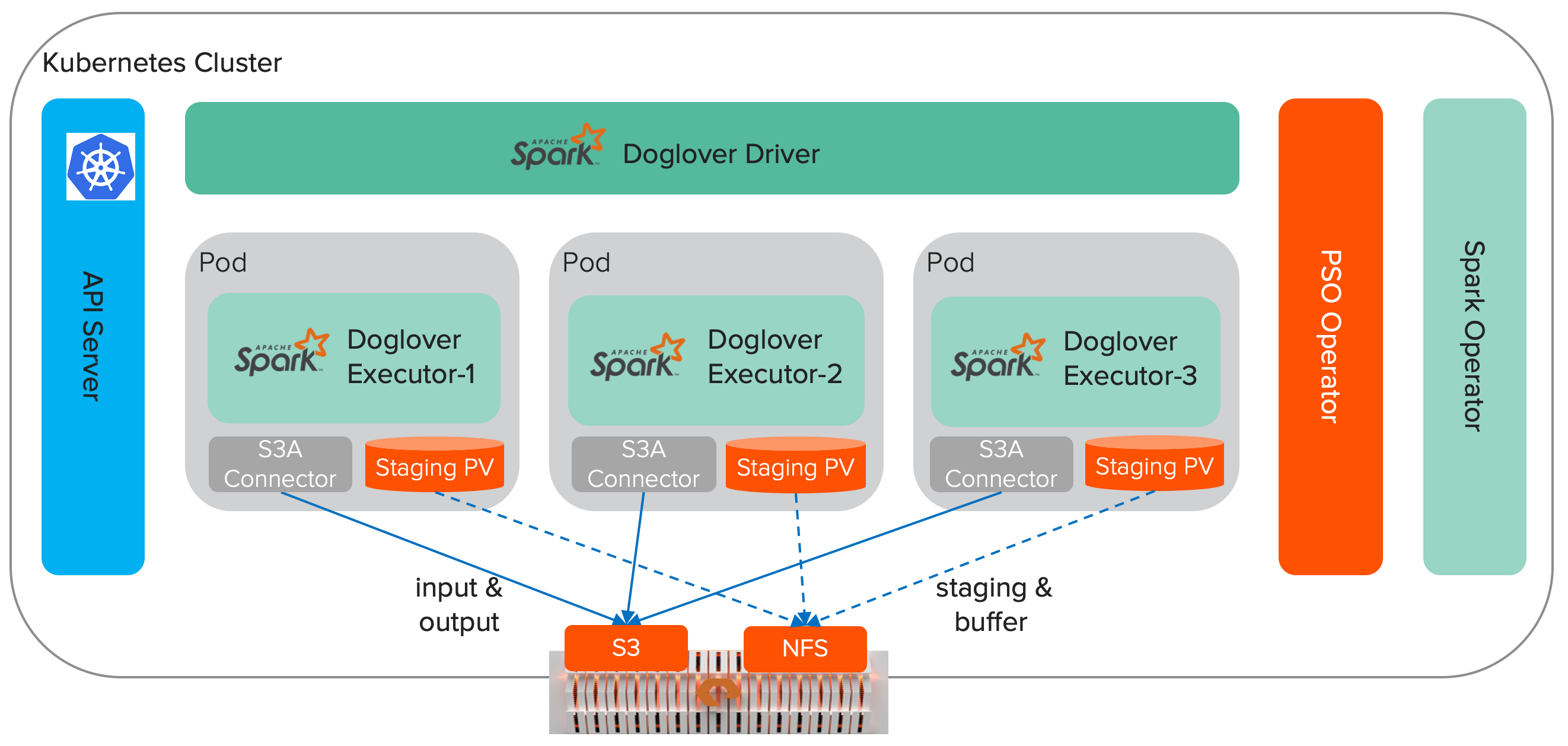

Learn to implement your own Apache Hadoop and Spark workflows on AWS in this course with big data architect Lynn Langit. Explore deployment options for production-scaled jobs using virtual machines with EC2, managed Spark clusters with EMR, or containers with EKS. Learn how to configure and manage Hadoop clusters and Spark jobs with Databricks.

1.1.3 You should then be signed in.

In this tutorial, we will learn how to configure an Apache Hadoop cluster on AWS. We will cover the steps to configure Hadoop on AWS and set up the cluster, starting with the platform requirements for an Apache Hadoop cluster on AWS, the prerequisites for installing Apache Hadoop and the cluster on AWS, and the software required for installing Hadoop.

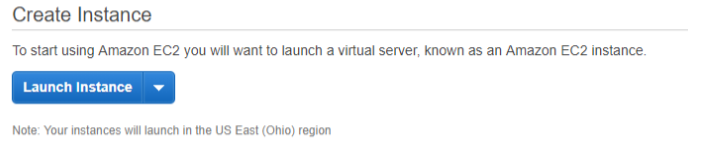

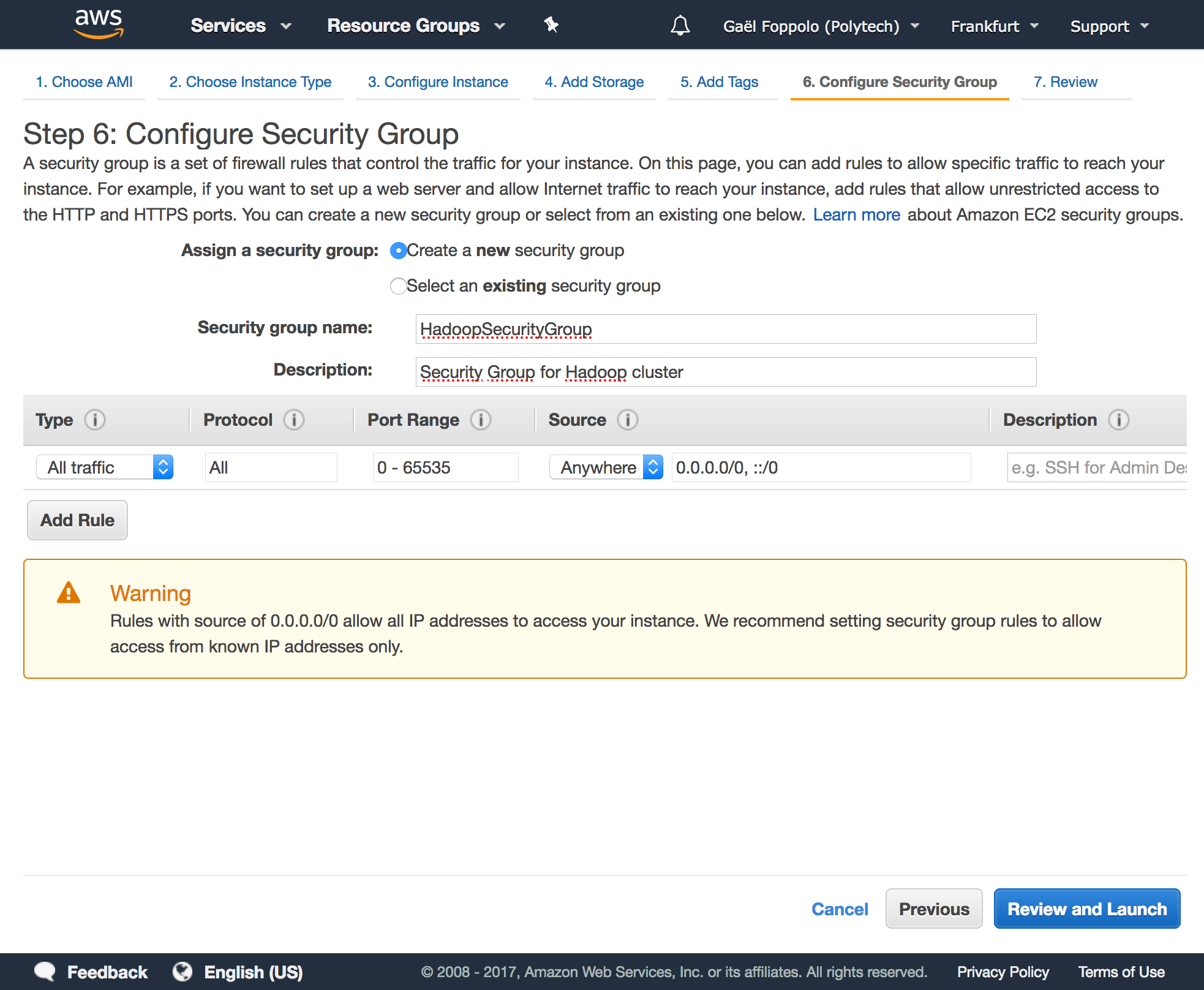

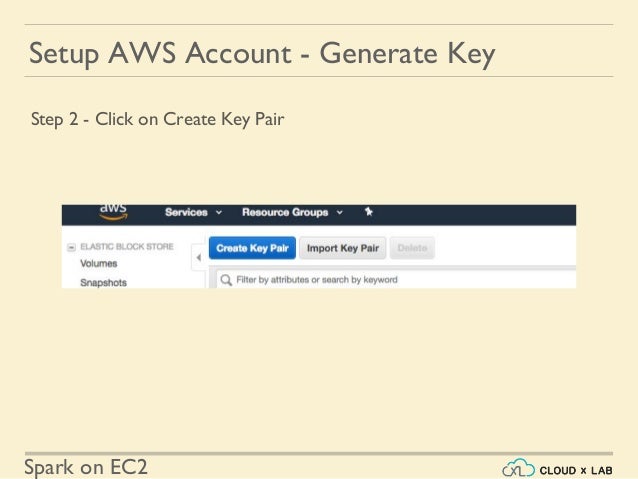

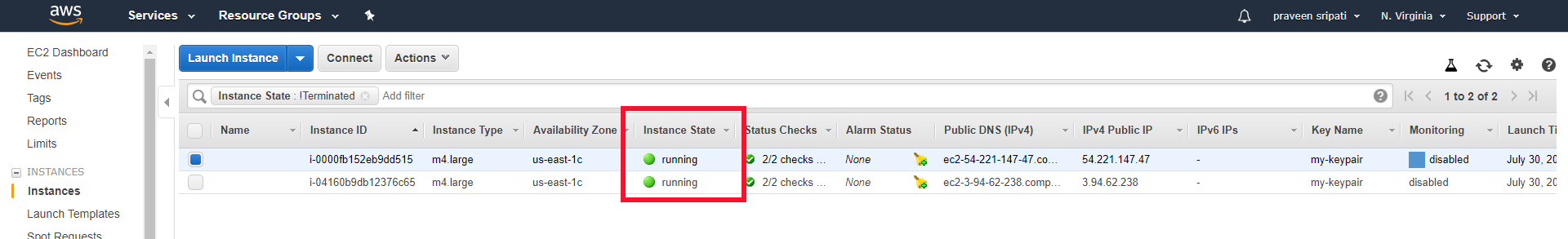

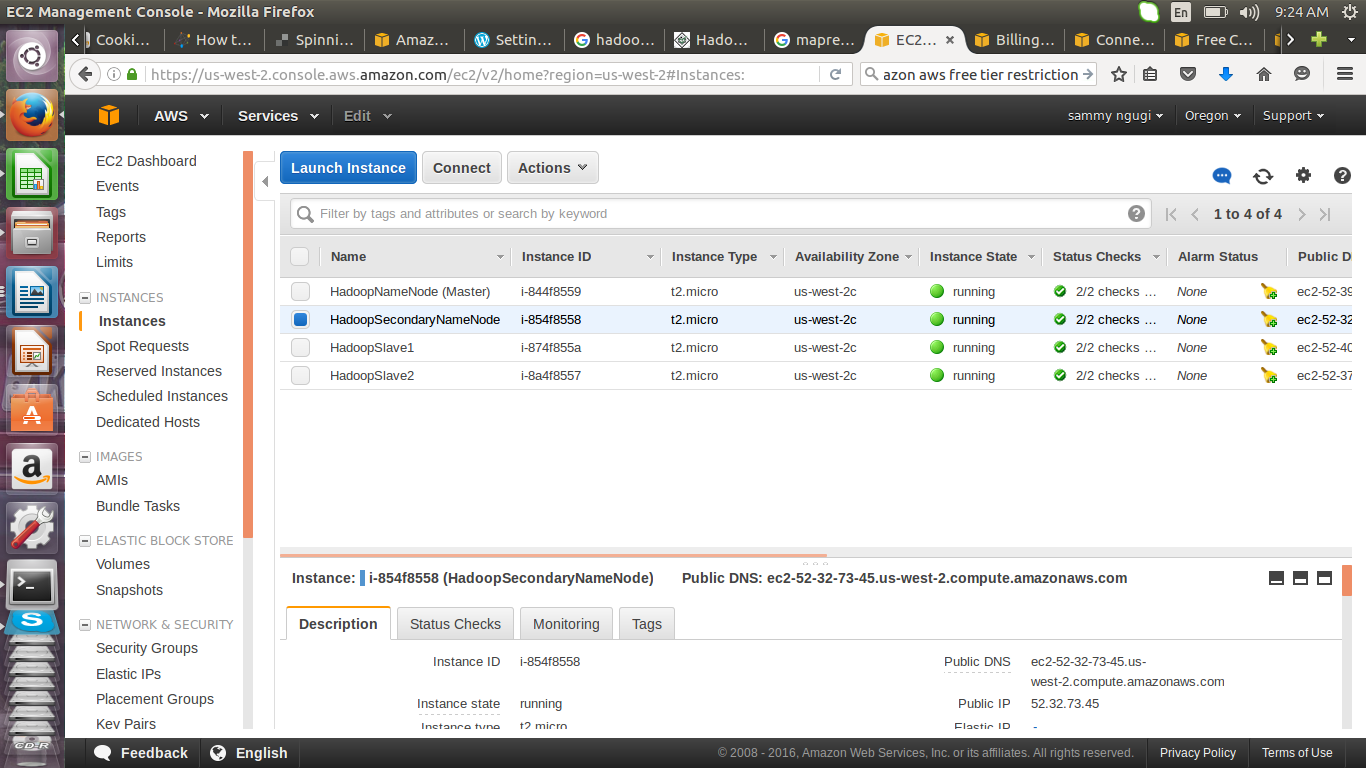

It will pull in a compatible aws-sdk JAR. The hadoop-aws JAR does not declare any dependencies other than the one unique to it, the AWS SDK JAR.

1.2 3rd-Party Software Tools. 1.2.1 Option 1: Firefox add-on for S3.

To set up a single-node Hadoop cluster on an EC2 instance, first log in to the AWS Management Console with your login ID and password. After logging in to EC2, click the Instances option on the left side of the dashboard; you will see an instance in the stopped state. Start it by right-clicking the stopped instance > Instance State > Start. A pop-up will ask you to confirm; click Yes.

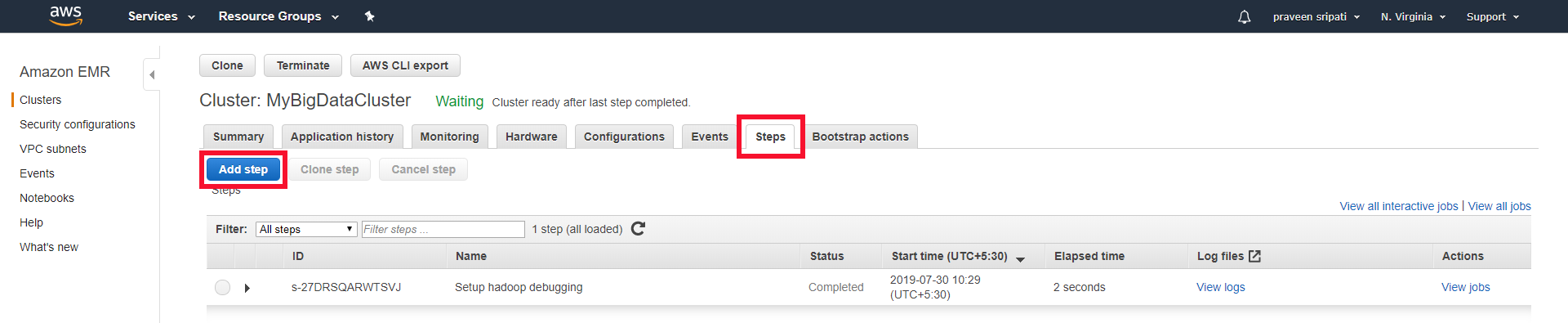

Related tutorials: Hadoop Pig Tutorial (What is, Architecture, Example); Apache Oozie Tutorial (What is, Workflow, Example); Big Data Testing Tutorial (What is, Strategy, How to test Hadoop); Hadoop & MapReduce Interview Questions & Answers.

AWS EMR Tutorial – Part 1. STEP 1: Launch EMR Cluster. Try to choose the default values that your console gives you. If you already have a key pair... STEP 2: ACCESSING MASTER NODE. In my opinion, EMR does not encourage accessing the master node over SSH. However, we’ll try. STEP 3: TESTING SAMPLE.

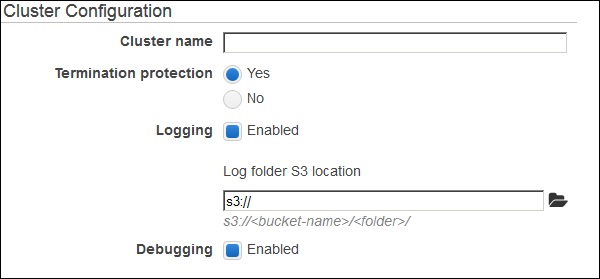

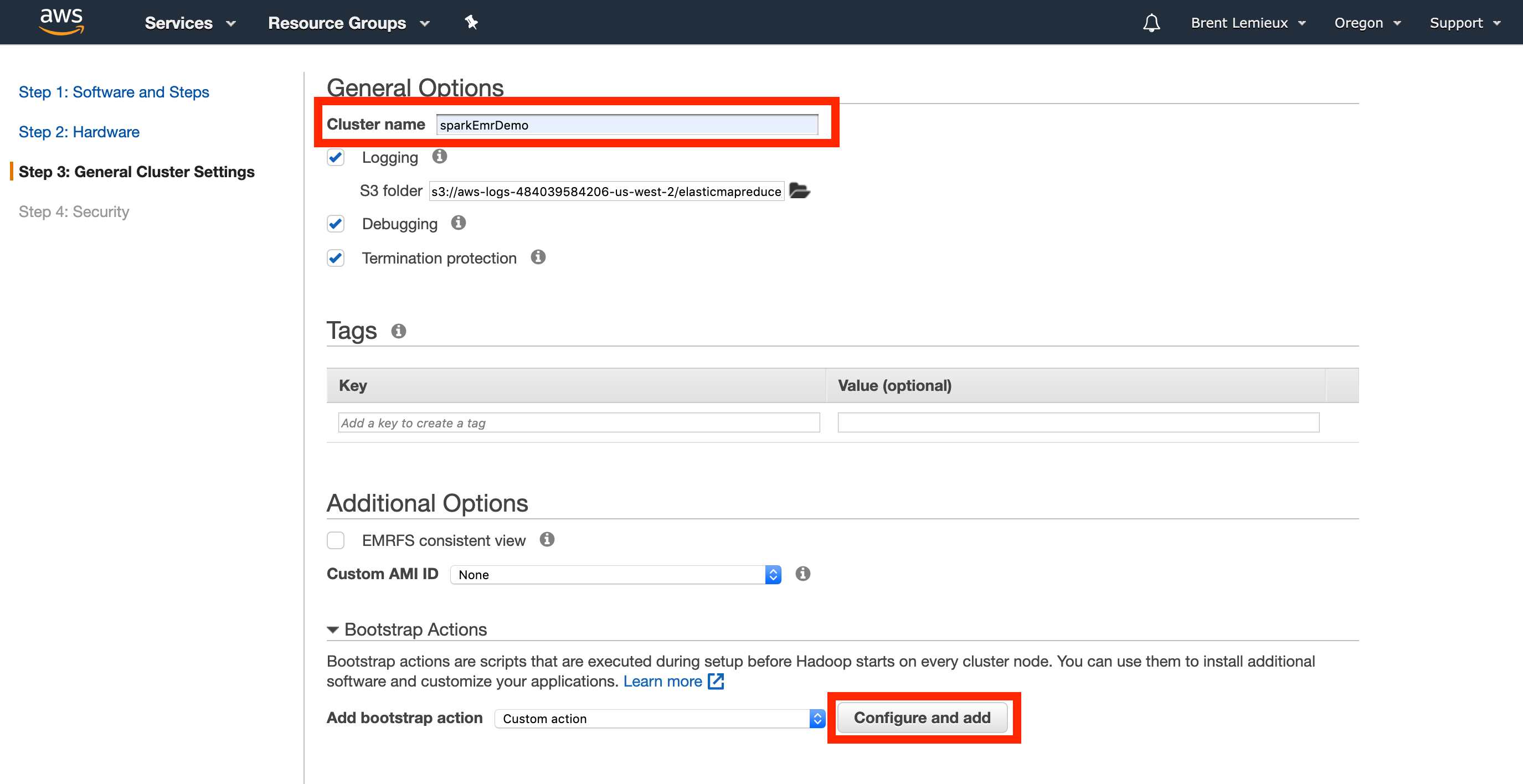

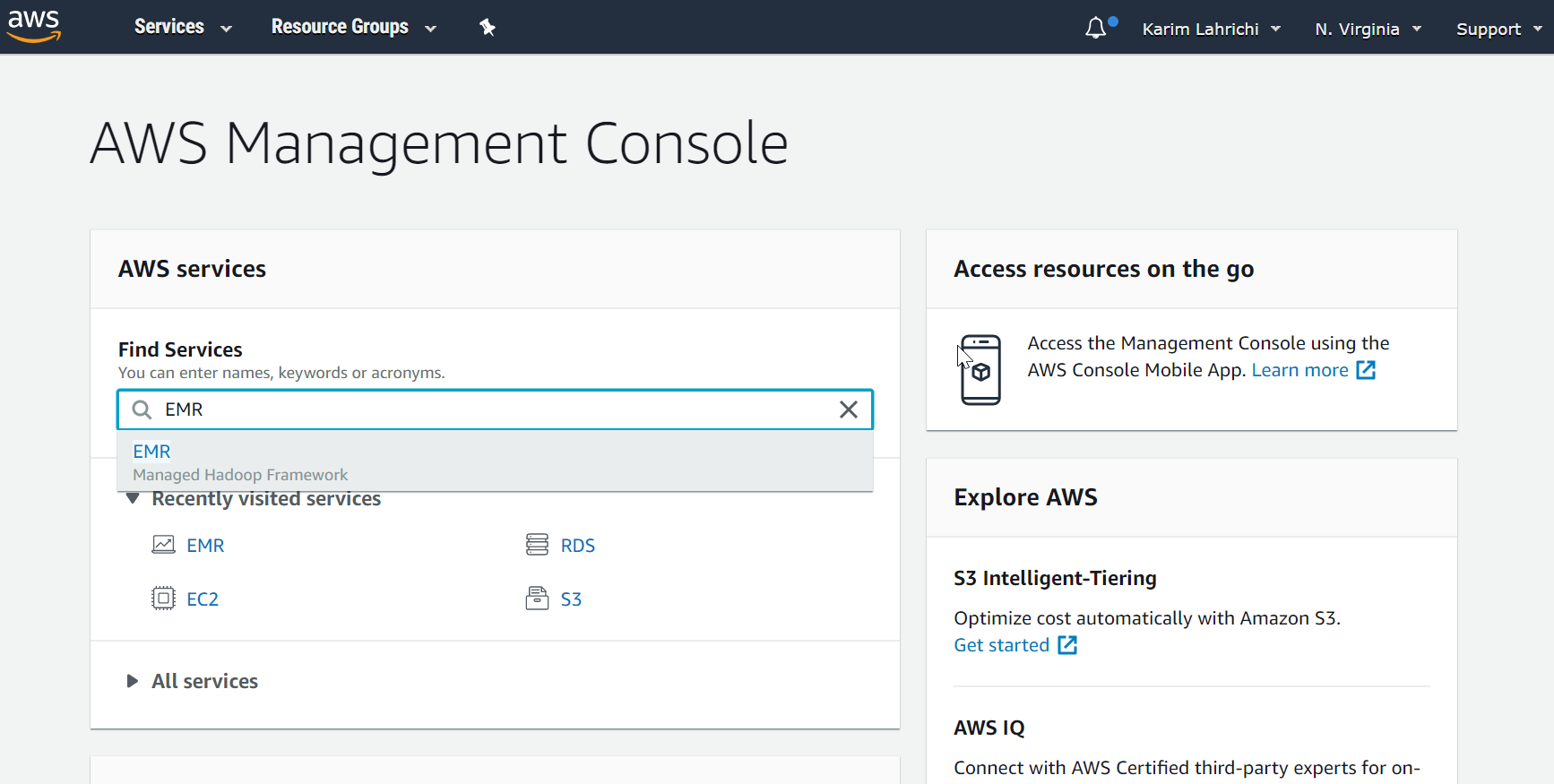

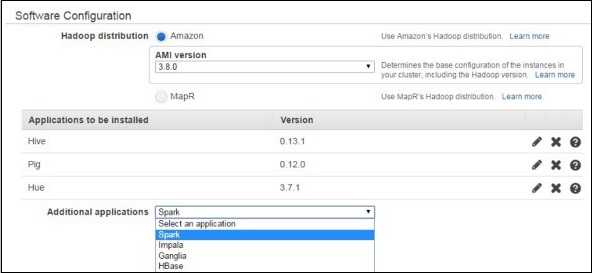

Step 1: Go to the EMR Management Console and click on “Create cluster”. In the console, the metadata for the terminated... Step 2: From the quick options screen, click on “Go to advanced options” to specify more details about the cluster. Step 3: In the Advanced Options tab, we can select...

Install Java. Command: `sudo yum install java-devel`. I am installing hadoop-2.6.0 on the cluster. The command below downloads the hadoop-2.6.0 package. Command: `wget` followed by the package link. Check that the package was downloaded. Command: `ls`. Untar the file. Command: `tar xvf hadoop-2.6.0.tar.gz`. Make the hostname ec2-user.

How to set up a 4-node Amazon cluster for Hadoop.

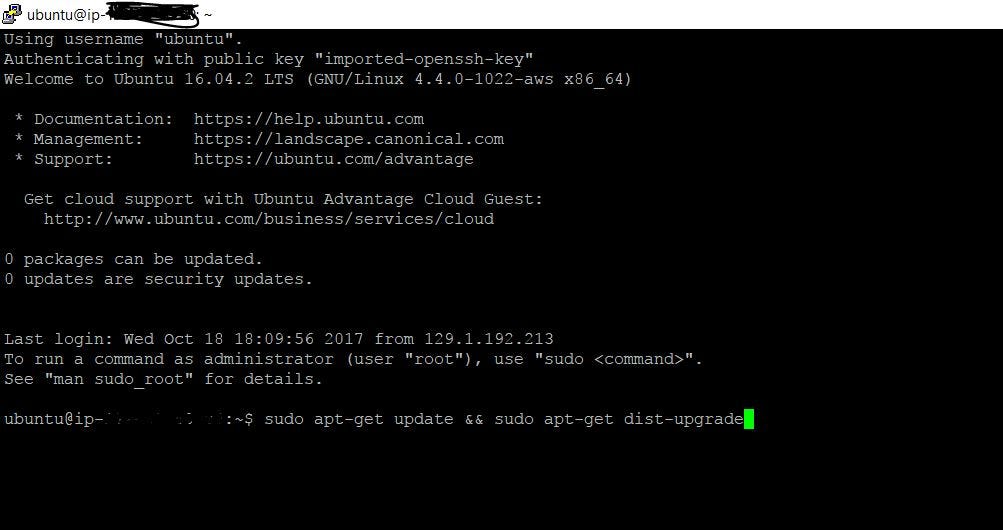

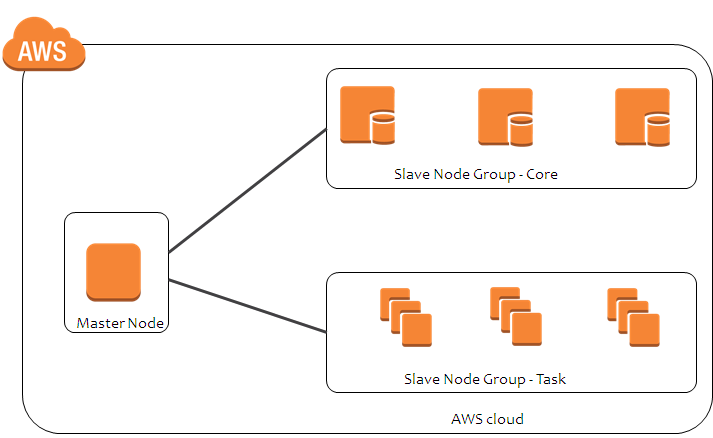

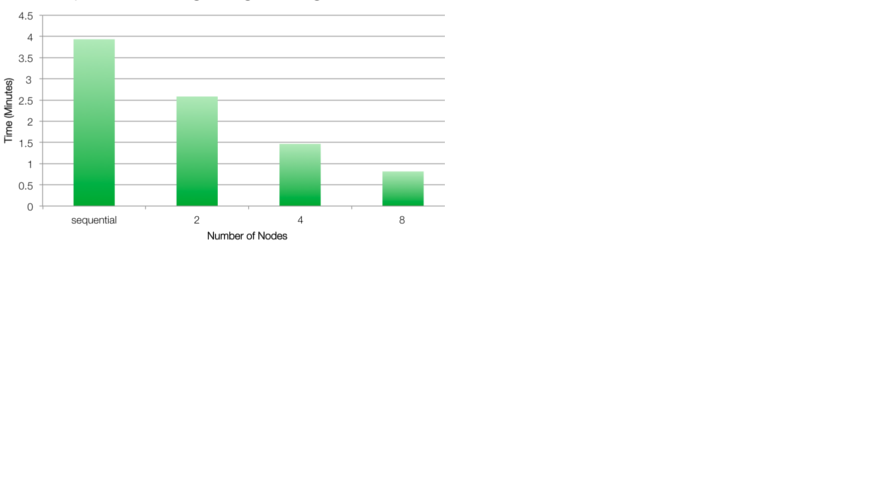

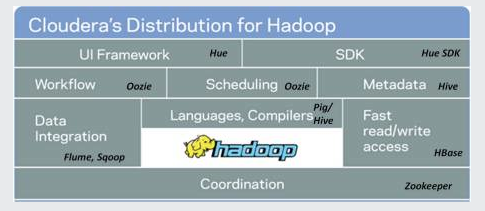

A multi-node cluster setup tutorial and guide that explains how to deploy and run Hadoop in distributed mode. In this video tutorial you will learn to: 1. Launch 3 instances of Ubuntu on AWS (Amazon Cloud). 2. Set up prerequisites such as installing Java and configuring passwordless SSH. 3. Install Hadoop 2.x (Cloudera CDH5) on the master as well as all the slaves.

If you aspire to learn Hadoop the right way, you have landed at the perfect place.

1.1.2 Sign in with your AWS account.

Tutorial: Creating a Hadoop Cluster with StarCluster on Amazon AWS. Setup: If you have already installed StarCluster on your local machine, and have already tested it on AWS, creating a... Starting the 1-Node Hadoop Cluster: StarCluster (http://star.mit.edu/cluster) (v. 0.95.6).

For a Java class final project, we need to set up Hadoop and implement an n-gram processor. I have found a number of 'Hadoop on AWS' tutorials, but am uncertain how to deploy Hadoop while staying in the free tier. I tried a while ago and received a bill for over $250 USD.

Sign up for Amazon Elastic Compute Cloud (Amazon EC2) and Simple Storage Service (S3). They have an inexpensive pay-as-you-go model, which is great for developers who want to experiment with setting up a Hadoop HDFS cluster. Next, order the book “Hadoop: The Definitive Guide” and follow the instructions in the section “Hadoop in the Cloud”.

Tutorials: Process Data Using Amazon EMR with Hadoop Streaming; Import and Export DynamoDB Data Using AWS Data Pipeline; Copy CSV Data Between Amazon S3 Buckets Using AWS Data Pipeline; Export MySQL Data to Amazon S3 Using AWS Data Pipeline; Copy Data to Amazon Redshift Using AWS Data Pipeline. © 21, Amazon Web Services, Inc. or its affiliates.

Install Apache Hadoop 2.7.3 on all the instances. Obtain the download link from the Apache website and run the following commands. We install Hadoop under a directory named `server` in the home directory: `mkdir server`, `cd server`, `wget` followed by the download link, and `tar xvzf hadoop-2.7.3.tar.gz`.

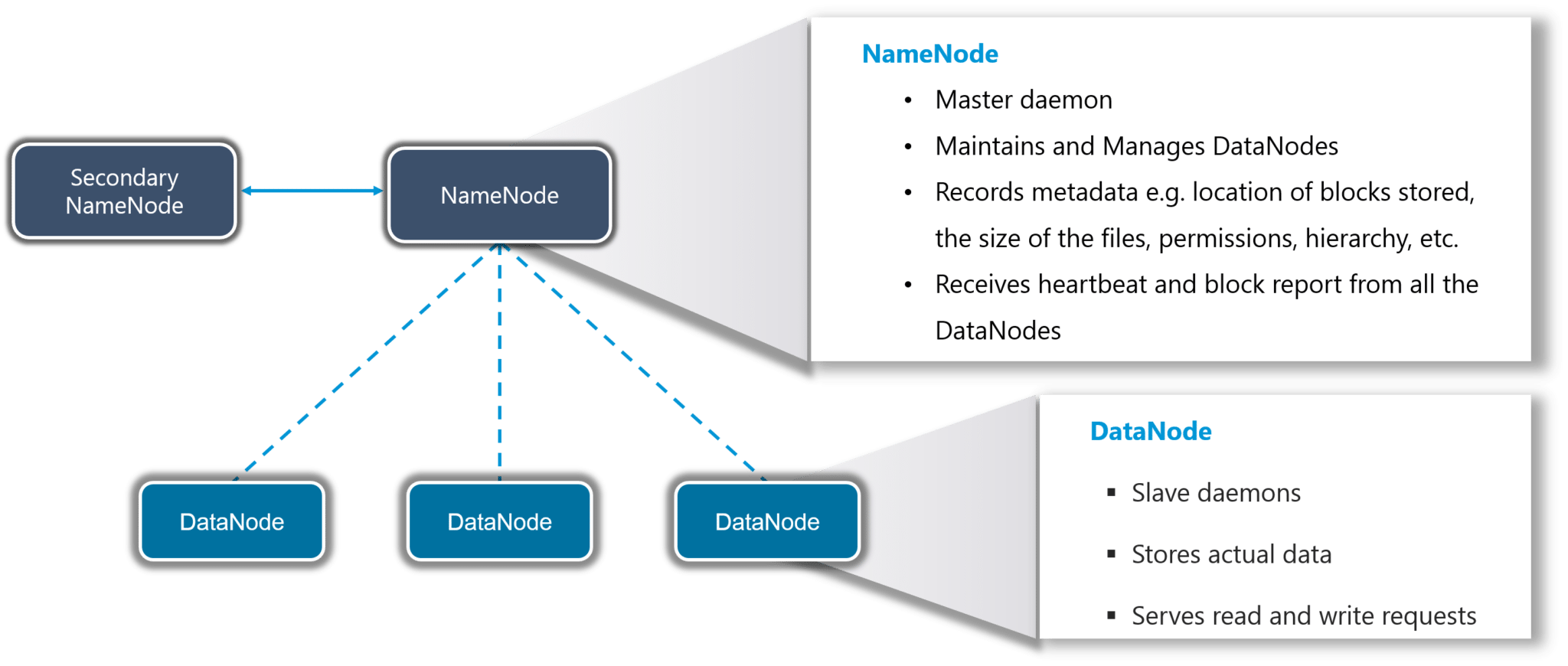

The versions of hadoop-common and hadoop-aws must be identical. To import the libraries into a Maven build, add the hadoop-aws JAR to the build dependencies.

This Hadoop tutorial covers basic and advanced concepts of Hadoop and is designed for beginners and professionals. Hadoop is an open source framework provided by Apache to process and analyze very large volumes of data. It is written in Java and currently used by Google, Facebook, LinkedIn, Yahoo, Twitter, etc. The tutorial includes all topics of Big Data Hadoop with HDFS, MapReduce, YARN, Hive, HBase, Pig, Sqoop, etc.
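The rule that hadoop-common and hadoop-aws must share the same version can be checked before a job is submitted. The sketch below is one possible way to do that, assuming the JARs follow the usual `artifact-version.jar` file naming; the helper names `hadoop_jar_versions` and `versions_match` are hypothetical and not part of Hadoop.

```python
import re

# Matches names such as "hadoop-aws-2.7.3.jar" and captures artifact and version.
_JAR_PATTERN = re.compile(r"^(hadoop-[a-z-]+?)-(\d+(?:\.\d+)*)\.jar$")


def hadoop_jar_versions(jar_names):
    """Map each Hadoop artifact name to the version found in its file name."""
    versions = {}
    for name in jar_names:
        match = _JAR_PATTERN.match(name)
        if match:
            versions[match.group(1)] = match.group(2)
    return versions


def versions_match(jar_names, artifacts=("hadoop-common", "hadoop-aws")):
    """Return True only if every listed artifact is present with the same version."""
    versions = hadoop_jar_versions(jar_names)
    found = [versions.get(artifact) for artifact in artifacts]
    return None not in found and len(set(found)) == 1
```

For example, `versions_match(["hadoop-common-2.7.3.jar", "hadoop-aws-2.6.0.jar"])` returns `False`, which flags the kind of mismatch the rule warns against. JARs that do not start with `hadoop-`, such as the AWS SDK JAR, are ignored by this check.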

Locate the HADOOP_URL variable and point it to where you want to pull the desired version’s binary. Of course, you must make sure the binary exists: either move it to your own personal S3 bucket and reference that location, or point it to the Apache site, accepting the instability I pointed out above.

1.1.5 Locate your Amazon credentials. 1.1.4 Sign up for EC2, S3, and MapReduce.

In this tutorial, we will use a WordCount Java example developed with Hadoop and then execute our program on Amazon Elastic MapReduce. Prerequisites: you must have a valid AWS account.

Fig: Hadoop Tutorial – Solution to Restaurant Problem (awssenior.com). Bob came up with another efficient solution: he divided all the chefs into two hierarchies, junior chefs and a head chef, and assigned...

...applications to easily use this support. To include the S3A client in Apache Hadoop’s default classpath, make sure that HADOOP_OPTIONAL_TOOLS in hadoop-env.sh includes hadoop-aws in its list of optional modules to add to the classpath. For client-side interaction, you can declare that relevant JARs must...

There are a number of posts available across the internet on this topic.

Set up and configure a Hadoop cluster on these instances. Find the variable HADOOP_VER and change it to your desired version number.
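The HADOOP_VER and HADOOP_URL variables mentioned in this tutorial can be kept consistent by deriving the URL from the version. The following is a small sketch under stated assumptions: the binary is named `hadoop-<version>.tar.gz`, the base location is supplied by the reader, and the function names are hypothetical. The existence check only works for HTTP(S) locations, not for `s3://` URIs.

```python
import urllib.error
import urllib.request


def hadoop_tarball_url(version, base_url):
    """Join a base location and a Hadoop version into a tarball URL."""
    return f"{base_url.rstrip('/')}/hadoop-{version}.tar.gz"


def render_settings(version, base_url):
    """Return the two settings as a dict, with the URL derived from the version."""
    return {
        "HADOOP_VER": version,
        "HADOOP_URL": hadoop_tarball_url(version, base_url),
    }


def binary_exists(url, timeout=10):
    """Send an HTTP HEAD request and report whether the binary is reachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, TimeoutError):
        return False
```

Calling `render_settings("2.7.3", "https://example-bucket.s3.amazonaws.com/hadoop")` yields both values at once, where `example-bucket` stands in for a personal bucket. Running `binary_exists` on the resulting URL before launching the cluster catches a missing binary early.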

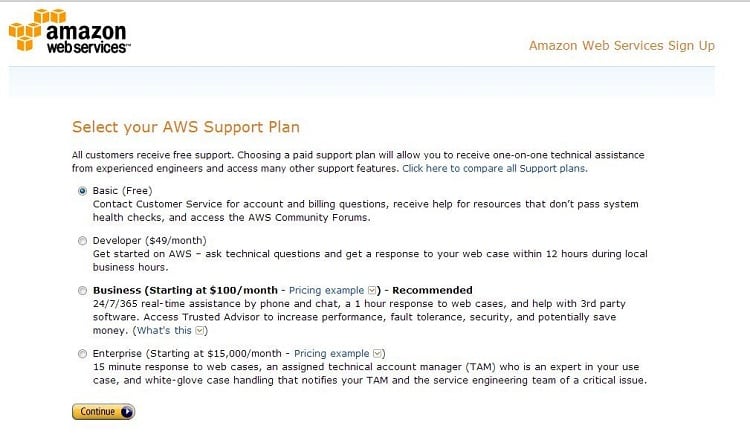

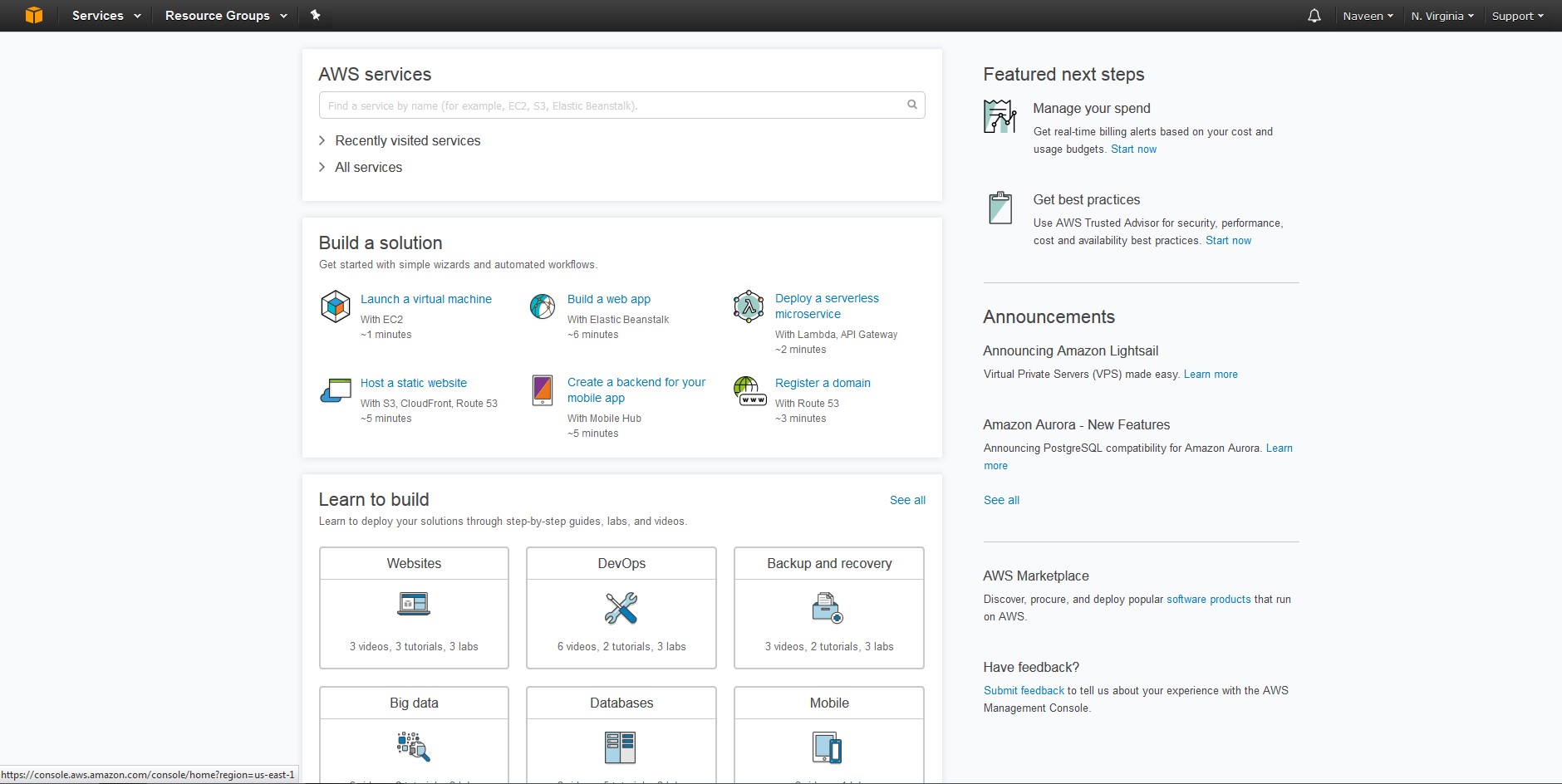

First, open an account with Amazon Web Services (AWS).

Set up an AWS instance: we are going to create an EC2 instance using the latest Ubuntu Server as the OS. After logging in to AWS, go to the AWS Console and choose the EC2 service. On the EC2 Dashboard, click Launch Instance.

This tutorial covers various important topics illustrating how AWS works and how it is beneficial to run your website on Amazon Web Services. Audience: this tutorial is prepared for beginners who want to learn how Amazon Web Services works to provide reliable, flexible, and cost-effective cloud computing services.

Fig: Hadoop Tutorial – Distributed Processing Scenario Failure (awssenior.com). Similarly, to tackle the problem of processing huge data sets, multiple processing units were installed to process the data in parallel (just like Bob hired 4 chefs). But even in this case, bringing multiple...

Set up and configure instances on AWS.

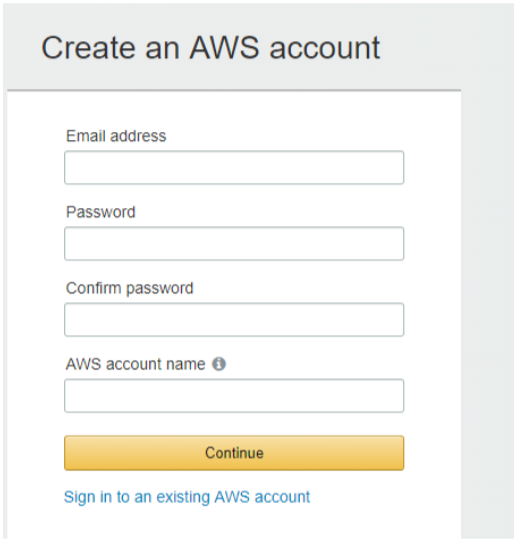

Step 1 − Sign in to your AWS account and select Amazon EMR on the management console. Step 2 − Create an Amazon S3 bucket for cluster logs and output data (the procedure is explained in detail in the Amazon S3 section).

How To Create Hadoop Cluster With Amazon Emr Edureka

Learn How To Set Up A Multi Node Hadoop Cluster On Aws

Tutorial How To Upload Files Using The Aws Sdk Gem

AWS Hadoop Tutorial Gallery

Hadoop Tutorial 3 1 Using Amazon S Wordcount Program Dftwiki

Launch And Manage Spark Nodes On Aws Ec2 Big Data Hadoop Spark Tuto

Big Data Hadoop Tutorial Learn Big Data Hadoop From Experts Intellipaat

How To Install Apache Hadoop Cluster On Amazon Ec2 Tutorial Edureka

How To Set Up Pyspark Hadoop On Aws Ec2 Python 3 By Philomena Lamoureux Medium

Amazon Web Services Elastic Mapreduce Tutorialspoint

Amazon Emr Tutorial Apache Zeppelin Hbase Interpreters

Big Data On Aws Tutorial Big Data On Amazon Web Services Intellipaat Youtube

Hadoop Tutorial Getting Started With Big Data And Hadoop Edureka

What Is Ec2 In Aws Amazon Ec2 Tutorial Intellipaat

How To Setup An Apache Hadoop Cluster On Aws Prwatech

How To Analyze Big Data With Hadoop Amazon Web Services Aws

Learn Amazon Web Services Tutorials Aws Tutorial For Beginners

Setup 4 Node Hadoop Cluster On Aws Ec2 Instances By Jeevan Anand Medium

Getting Started With Pyspark On Aws Emr By Brent Lemieux Towards Data Science

Top 6 Hadoop Vendors Providing Big Data Solutions In Open Data Platform

Hadoop Cluster Setup On Aws Hadoop Administration Tutorial Hadoop Admin Training Edureka Youtube

Top 50 Aws Interview Questions Answers

Big Data Hadoop Tutorial For Disk Replacement

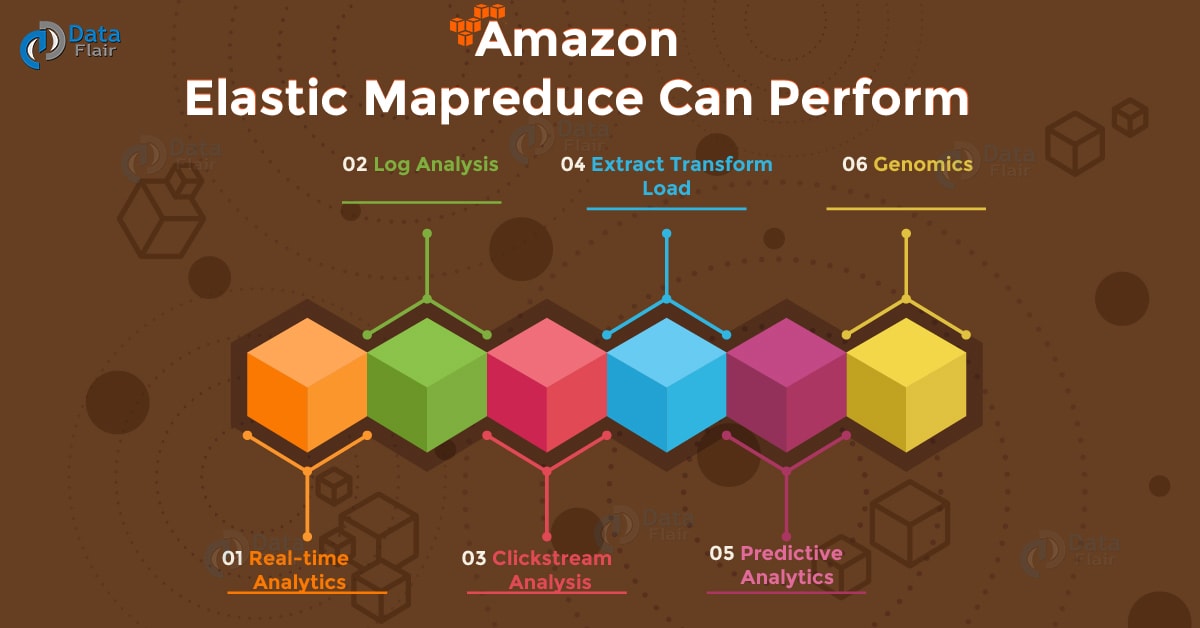

Aws Emr Tutorial What Can Amazon Emr Perform Dataflair

Let S Try Hadoop On Aws A Simple Hadoop Cluster With 4 Nodes A By Gael Foppolo Gael Foppolo

Will Kubernetes Sink The Hadoop Ship The New Stack

Aws Tutorial For Beginners Learn Amazon Web Services In 7 Min Dataflair

How To Setup An Apache Hadoop Cluster On Aws Ec2 Novixys Software Dev Blog

t302 Big Data Beyond Hadoop Running Mahout Giraph And R On Ama

Www Cs Duke Edu Courses Fall15 Compsci590 4 Assignment3 Mapreducetutorial Pdf

Migrate And Deploy Your Apache Hive Metastore On Amazon Emr Aws Big Data Blog

Apache Oozie Tutorial What Is Workflow Example Hadoop

Apache Spark With Kubernetes And Fast S3 Access By Yifeng Jiang Towards Data Science

Http Docs Cascading Org Tutorials Cascading Aws Part2 Html

Tutorials

Orchestrate Big Data Workflows With Apache Airflow Genie And Amazon Emr Part 2 Aws Big Data Blog

Features Of Aws Javatpoint

Hadoop Tutorial 3 3 How Much For 1 Month Of Aws Mapreduce Dftwiki

Aws Tutorial Amazon Web Services Tutorial Javatpoint

What Is Big Data Aws Big Data Tutorial For Beginners Big Data Tutorial Hadoop Training Youtube

Installing An Aws Emr Cluster Tutorial Big Data Demystified

Implementing Authorization And Auditing Using Apache Ranger On Amazon Emr Aws Big Data Blog

Hadoop On Aws Using Emr Tutorial S3 Athena Glue Quicksight Youtube

Analyze Data With Presto And Airpal On Amazon Emr Aws Big Data Blog

Aws Emr Spark On Hadoop Scala Anshuman Guha

Map Reduce With Python And Hadoop On Aws Emr By Chiefhustler Level Up Coding

Learn The 10 Useful Difference Between Hadoop Vs Redshift

Aws Route 53 Tutorial 6 Major Features Of Amazon Route 53 Dataflair

Amazon Redshift Vs Hadoop How To Make The Right Choice

Build A Healthcare Data Warehouse Using Amazon Emr Amazon Redshift Aws Lambda And Omop Aws Big Data Blog

Tutorials Dojo Answer B And C Explanation Amazon Ec2 Provides You Access To The Operating System Of The Instance That You Created Amazon Emr Provides You A Managed Hadoop Framework That

Amazon Web Services Aws Tutorial Guide For Beginner In Video Pdf

Articles Tutorials Amazon Web Services Computer Science Big Data Tutorial

Hadoop Tutorial 2 3 Running Wordcount In Python On Aws Dftwiki

Hadoop Tutorial Aws Senior Com

Tutorial Getting Started With Amazon Emr Amazon Emr

Amazon Emr Five Ways To Improve The Way You Use Hadoop

How To Setup Hadoop Cluster On Aws Using Ansible Dev Genius

Launch An Edge Node For Amazon Emr To Run Rstudio Aws Big Data Blog

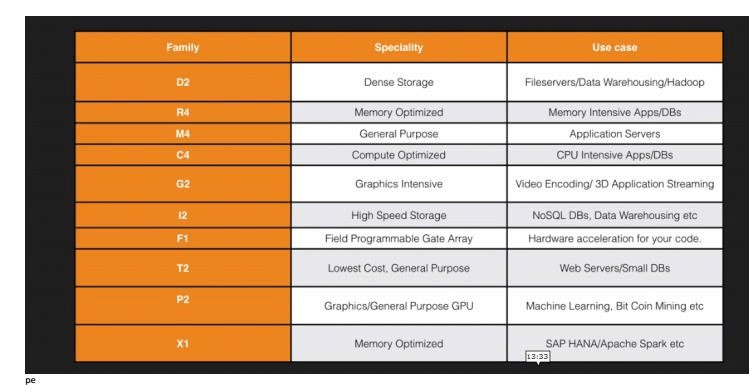

Aws Instances Machine Learning With Aws

Metadata Classification Lineage And Discovery Using Apache Atlas On Amazon Emr Aws Big Data Blog

Hadoop On Aws

Use Sqoop To Transfer Data From Amazon Emr To Amazon Rds Aws Big Data Blog

Apache Hadoop Tutorial Hadoop In The Cloud Amazon Web Services Youtube

Learn Big Data Hadoop Tutorial Javatpoint

Cloud Processing With Wfdb Starcluster Amazon Ec2 Octave And Hadoop

Aws Emr Spark S3 Storage Zeppelin Notebook Youtube

How To Set Up A Multi Node Hadoop Cluster On Amazon Ec2 Part 1 Dzone Big Data

Using Spark Sql For Etl Aws Big Data Blog

Top 6 Hadoop Vendors Providing Big Data Solutions Intellipaat Blog

Apache Hadoop Cdh 5 Install

Python Training Institutes Pune For Data Analytics Classes Tutorial And Machine Learning Data Science Machine Learning Data Analytics

Big Data Hadoop Tutorial For Beginners Hadoop Module Architecture

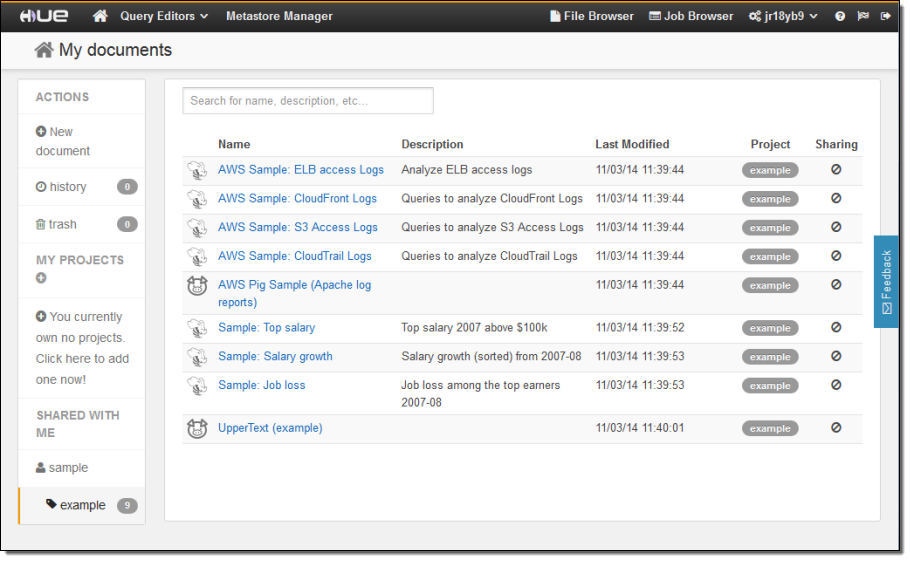

Hue A Web User Interface For Analyzing Data With Elastic Mapreduce Aws News Blog

Amazon Emr Tutorials Dojo

Snap Stanford Edu Class Cs341 13 Downloads Amazon Emr Tutorial Pdf

Amazon Emr Migration Guide Aws Big Data Blog
